Improving Site Performance

Our site hasn't really been our focus over the past months, instead I've been concentrating on acquiring new network locations, while Will has been improving our server setup and maintenance architecture (we've blogged about Setting up Proxy Servers and Managing 30+ servers previously). More recently we've been taking a harder look at how the site performs, both for us, and for our users, and found it lacking.

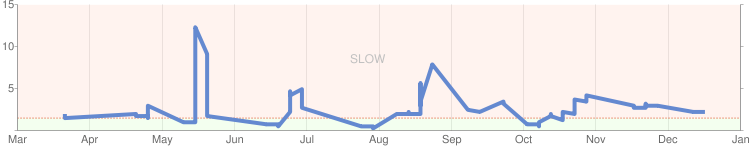

Examining our main page's performance with XHGui quickly revealed that a considerable amount of time was being spent generating the information displayed in the footer (server list, server country list, and proxied traffic). This data was shuffled off to APC's user storage mechanism removing it from the average page load entirely. Google Webmaster Tools still reported a startlingly high average pageload time:

This was quite surprising as the site seemed pretty snappy overall. Further investigation showed that server specific pages loaded more slowly (3-5 seconds!). Since our goal is to provide proxies for GeoIP testing, having server specific pages load slowly is sub-optimal. Looking at the pages with YSlow and Page Speed reveals that the real culprit is the embedded Google Map. Switching to use a static map greatly reduced page load time (to ~800ms). This also reduced functionality, as the map is no longer dynamic, but we plan on switching to a combined static & dynamic system in the future.

If you're interested in front end performance, High Performance Web Sites and Even Faster Web Sites are invaluable.

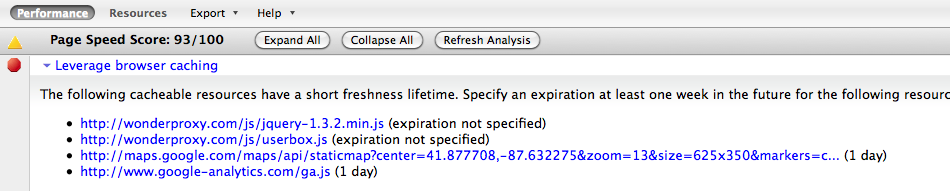

Reading through the suggestions from YSlow a bit more closely, then diving into the Apache documentation I also managed to find a few quick gains by configuring our web server to do a bit more work for us:

ExpiresActive On

ExpiresByType image/png "access plus 1 month"

ExpiresByType text/css "access plus 1 month"

ExpiresByType image/jpeg "acces plus 1 month"

<Location />

SetOutputFilter DEFLATE

BrowserMatch ^Mozilla/4 gzip-only-text/html

BrowserMatch ^Mozilla/4\.0[678] no-gzip

BrowserMatch \bMSI[E] !no-gzip !gzip-only-text/html

SetEnvIfNoCase Request_URI .(?:gif|jpe?g|png)$ no-gzip dont-vary

</Location>

YSlow will tell you to turn off eTags under the default rule-set. If you're running with a single web server this is bad advice. You may want to select the Small Site or Blog ruleset to get the most out of the tool. Moving forward we may decide to re-organize our javascript code to make expiry rules for it easy (we can't set distant expiry for all javascript documents as our live status bar relies on it), for now we'll leave them as is. We're happy with our new scores:

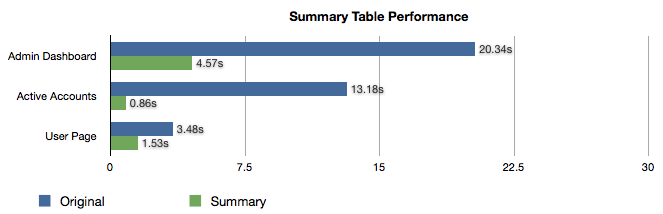

Having sorted out the low hanging fruit on the front end, I looked at the account pages and the administrative tools we're using. Performance there was abysmal, with some pages taking at least 10 seconds to load. The pages with the worst performance were the ones displaying any sort of usage statistic; the very worst being ones that displayed aggregate statistics for all users. Looking at our usage table built on the squid logs it has nearly a million rows. Despite being indexed there's still a lot of data to aggregate and sum.

With an eye toward improving performance I decided to build some summary tables. The first one aggregates usage by user, by server, by day. This summary table was roughly 1/23rd of the original usage table. Makes sense since it rolled up the 24 hourly reports into one. This table is considerably quicker to query, and I started rolling it out to various portions of the admin section immediately.

While these numbers are still rather pathetic, remember that these are admin actions, not forward facing pages. Optimizing for these would be folly; the time would be much better spent working on outward facing pages read by users and search engines alike. The significant increase here will simply make managing the system a speedier task.

Knowing that the summary table is going to be useful, we need to keep it up to date. To accomplish this task we're running this query after every log rotation (a process described in our post Squid log parsing for proxy billing). Luckily I've got friends at Percona (authors of the MySQL Performance Blog) who gave me a hand with crafting the query:

INSERT INTO sum_usage_daily SELECT

`user_id`,

`group_id`,

server_id,

date(`timestamp`)AS `date`,

sum(`bytes`)AS `bytesSum`

FROM

`usage`

WHERE

`server_id` IS NOT NULL

AND timestamp BETWEEN date_sub(date(NOW()), INTERVAL 2 DAY) AND date(NOW())

GROUP BY

`dd`,

`user_id`,

`server_id`,

`group_id`

ORDER BY

NULL ON DUPLICATE KEY UPDATE bytes = VALUES(bytes);

Note: ON DUPLICATE KEY UPDATE had numerous bugs prior to MySQL 5.0.38, ensure you're on a recent version before mimicking this query with your own data.

This query looks at the past two days of traffic, either inserting new records, or updating existing ones when they exist. The AND timestamp BETWEEN date_sub(date(NOW()), INTERVAL 2 DAY) AND date(NOW()) clause ensures we're looking at full days, rather than the last 48 hours (the latter would result in incorrect summaries for entire days). This keeps the summary table up to date throughout the day and ensures that yesterday's data is correct as well.

My only regret was changing some of the column names in the summary table. While "date" represents the contents of the column better than "timestamp", it did mean that column references in code had to be changed rather than just switching the table reference. Other than that the conversion has been quite quick and painless.

Having reigned in the performance of the site, it's time to look at adding new site features, and a few new products. More on those later.