Are Proxy Servers Slow?

Great question! Before we answer it, let’s dig into two different reasons websites can load slowly for users: latency and throughput.

Latency is a measure of how much time passes between taking an action and seeing the result. There’s a very small amount of latency between pressing a key on your keyboard and seeing the character on screen, so small you probably don’t even notice it. On the other hand, there’s often a fair amount of latency when you’re in Canada loading a website hosted in New Zealand.

Throughput is a measure of how much content or information can be delivered in a given amount of time. If you hooked up a garden hose and a fire hose to the same high-pressure faucet and turned them on at the same time, water would come out of both immediately (same latency), but a lot more water would come out of the fire hose (more throughput).

Consider needing to fill an aquarium with water when your taps are broken. You call your neighbour and ask them to bring you water. They leave their house immediately with a tablespoon of water, pour it into your aquarium, then go home to get the next tablespoon. You realize this is gonna take longer than you're willing to wait, so you thank your neighbour and call your brother, who lives across town. He leaves immediately, arrives 20 minutes later, and starts dumping large jugs of water into the aquarium.

The neighbour was a “low latency, low throughput” connection. You could communicate your request quickly and get a response quickly, but the rate at which you received water was low. In a web browser, a “low latency, low throughput” connection looks like a web page that starts to load quickly: maybe you get a headline, then more words, then the style sheet, then a picture, then another picture, and so on, all trickling in over time (those of you who used dial-up internet may remember this well).

The brother across town was a “high latency, high throughput” connection. It took a while for requests to go back and forth, but once data started coming in, it came in quickly. In a web browser, a “high latency, high throughput” connection initially looks broken. You may wait several seconds before anything happens, but once the page starts loading, content comes flooding in. Every action you take feels like it takes forever to get a response, but once that response starts, it tends to load well.
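One way to put numbers on the analogy is a deliberately simple model: the time to receive something is the latency plus its size divided by the throughput. The sketch below assumes made-up figures for the two "connections" (the neighbour: 50 ms latency and 50 kB/s; the brother: 2 s latency and 5,000 kB/s) and ignores real-world effects such as TCP slow start. It also computes the break-even size at which both take equally long; below it the low-latency neighbour wins, above it the high-throughput brother does.

```python
# Simplified model: total time = latency + size / throughput.
# All figures are hypothetical illustrations, not measurements.

def transfer_time(size_kb: float, latency_s: float, throughput_kb_per_s: float) -> float:
    """Seconds until `size_kb` kilobytes have fully arrived."""
    return latency_s + size_kb / throughput_kb_per_s


def break_even_size(lat_a: float, thr_a: float, lat_b: float, thr_b: float) -> float:
    """Size (kB) at which connections A and B finish at the same moment.

    Solves lat_a + S / thr_a == lat_b + S / thr_b for S.
    """
    return (lat_b - lat_a) / (1 / thr_a - 1 / thr_b)


# (latency in seconds, throughput in kB/s), both hypothetical
NEIGHBOUR = (0.05, 50.0)    # low latency, low throughput
BROTHER = (2.0, 5000.0)     # high latency, high throughput

for size in (1, 100, 2000):
    n = transfer_time(size, *NEIGHBOUR)
    b = transfer_time(size, *BROTHER)
    print(f"{size:>5} kB  neighbour {n:6.2f} s  brother {b:6.2f} s")

print(f"break-even size: {break_even_size(*NEIGHBOUR, *BROTHER):.1f} kB")
```

With these hypothetical numbers the break-even point is a little under 100 kB, which is smaller than many modern web pages. That is why latency tends to dominate small requests while throughput matters most for full page loads.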

What Does This Mean for Websites?

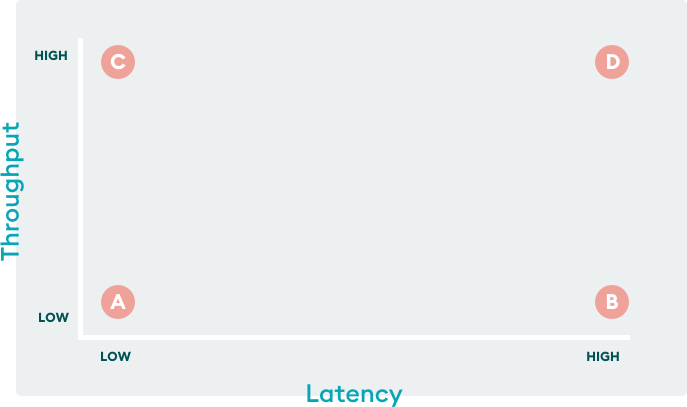

Let’s consider four combinations of latency and throughput. In reality both are sliding scales, and every value in between is possible.

A - Low Latency, Low Throughput

Here your browser will probably start doing things almost as soon as you start loading the page. The page title might appear in your browser tab and you’ll soon see a heading, but everything comes in at a trickle. Images will take a long time to load, chunks of content might display poorly until the CSS loads, and it may be some time before the page is usable.

B - High Latency, Low Throughput

Worst possible situation. It may take seconds for anything on the page to start showing at all, and you’ll wonder if anything is actually happening. Once things finally start to happen, they’ll happen slowly: images will trickle in, the page might re-organize once the CSS and JS finally load, etc.

C - Low Latency, High Throughput

Best possible situation! The page will start loading almost immediately, and once it starts, it keeps going. Images jump onto the page, videos might start autoplaying (sorry), and if you blink you might miss it entirely.

D - High Latency, High Throughput

The website may take some time to start loading, perhaps a few seconds before you see anything at all. Once the page starts loading, you’ll see lots of content at once. You may see content appear in waves: the first is just unstyled headers and blocks of text, the second loads stylesheets and JavaScript, and the final wave finishes off with images and media. As each new block of content arrives, what you’re viewing might change radically and quickly.

What Affects Latency

- Distance between you and the server providing the information. If a user in New York browses a secure site hosted in Sydney, they can expect to wait at least half a second before any content loads.[1] Shortening this distance is a major advantage of Content Delivery Networks (CDNs), which place content physically closer to end users.

- Server processing. Websites do a lot of work these days, and if you’re asking the server to search through 12,000,000 records, that might take a moment.
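To see why distance matters so much, here is a rough sketch of the physical floor on latency between New York and Sydney. It assumes light in optical fibre covers about 200 km per millisecond (roughly two-thirds of its speed in a vacuum), uses approximate city-centre coordinates with the haversine great-circle formula, and borrows the "three round trips for a secure connection" rule of thumb from footnote [1]. Real cables detour and packets queue, so actual pings are higher than this lower bound.

```python
import math

# Assumptions: fibre propagation speed and a spherical Earth.
FIBRE_KM_PER_MS = 200.0   # light in optical fibre, roughly 2/3 of c
EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound: there and back at fibre speed, no detours or queuing."""
    return 2 * distance_km / FIBRE_KM_PER_MS


# Approximate coordinates (latitude, longitude) in degrees
new_york = (40.7128, -74.0060)
sydney = (-33.8688, 151.2093)

d = great_circle_km(*new_york, *sydney)
rtt = min_round_trip_ms(d)
print(f"distance ~{d:,.0f} km, best-case round trip ~{rtt:.0f} ms")
print(f"three round trips for a secure connection: ~{3 * rtt:.0f} ms")
```

Even this idealized round trip comes out at roughly 160 ms, so three of them approach half a second before real-world routing adds anything.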

What Affects Throughput

- Network congestion. This applies to your home or office network, the network connection the server is using, and all the networks in between. Your network could be fine and the server’s network could be fine, but congestion anywhere in the middle will still reduce throughput.
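A simple way to picture this is that a path can't deliver data faster than its slowest hop. The sketch below is a simplification (real TCP throughput also depends on packet loss and round-trip time), and every link name and bandwidth figure in it is hypothetical.

```python
from typing import Iterable


def end_to_end_throughput(hops_mbps: Iterable[float]) -> float:
    """Simplified: the path is only as fast as its most constrained hop."""
    return min(hops_mbps)


# Hypothetical available bandwidth on each leg of the path, in Mbit/s
path = {
    "home network": 300,
    "your ISP": 250,
    "congested link in the middle": 12,
    "server's data centre": 1000,
}

bottleneck = min(path, key=path.get)
print(f"effective throughput ~{end_to_end_throughput(path.values())} Mbit/s, "
      f"limited by {bottleneck!r}")
```

Both ends here are fast, yet the whole transfer runs at the speed of the congested middle link.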

What About Proxy Servers?

Proxy servers add latency. When you send your traffic through a proxy server, you're adding an extra stop on the way to its destination and another extra stop on the way back. While it’s possible in special circumstances for a proxy server to maintain or even improve your latency,[2] in practice proxies usually increase it.

How much latency a proxy server adds depends largely on its physical location. A user in London, UK, using a proxy server in Coventry, UK, to browse a website hosted in Paris, France, likely wouldn’t notice the difference. That same user visiting the same site through a proxy server in New York would likely notice the additional latency. Luckily, as long as the proxy server doesn’t decrease throughput, the difference won't degrade test performance.

Under ideal conditions, proxy servers do not decrease throughput. A well-managed proxy server should have enough bandwidth to proxy all incoming requests, so it shouldn’t constrain throughput. This is clearly the camp we’re in. Most of the free or ad-supported proxy servers out there, on the other hand, are likely throughput-constrained.
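Putting latency and throughput together, here is a rough sketch of fetching one page directly versus through a proxy, in the spirit of the London/Coventry/New York example. A proxy turns each round trip into two legs (client to proxy, proxy to server), and its spare bandwidth joins the list of possible bottlenecks. Every ping time, bandwidth, and the page size below is hypothetical; the four round trips (three to set up a secure connection, as in footnote [1], plus one for the request) are an assumption, and the model ignores extra setup between browser and proxy as well as TCP dynamics.

```python
# Hypothetical model of a single page fetch; all numbers are illustrative.
ROUND_TRIPS = 4      # assumption: 3 for the secure connection + 1 for the request
PAGE_MBIT = 16.0     # hypothetical 2 MB page
PATH_MBPS = 100.0    # hypothetical throughput of the user's own path


def fetch_seconds(rtt_ms: float, throughput_mbps: float) -> float:
    """Time spent waiting on round trips plus time spent downloading."""
    return ROUND_TRIPS * rtt_ms / 1000 + PAGE_MBIT / throughput_mbps


def via_proxy(client_to_proxy_ms: float, proxy_to_server_ms: float,
              proxy_mbps: float) -> float:
    """Each round trip now covers two legs; the proxy may become the bottleneck."""
    rtt = client_to_proxy_ms + proxy_to_server_ms
    return fetch_seconds(rtt, min(PATH_MBPS, proxy_mbps))


scenarios = {
    "direct London -> Paris": fetch_seconds(10, PATH_MBPS),
    "via Coventry, well provisioned": via_proxy(5, 12, 1000),
    "via New York, well provisioned": via_proxy(75, 80, 1000),
    "via Coventry, overloaded": via_proxy(5, 12, 2),
}

for name, secs in scenarios.items():
    print(f"{name:<32} {secs:5.2f} s")
```

Under these assumptions, a nearby well-provisioned proxy adds only a few hundredths of a second, a distant one adds noticeably more, and a bandwidth-starved proxy dwarfs both.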

Conclusion

A well-managed proxy server will add some latency to your web browsing. In normal browsing, the extra latency may range from unnoticeable to slightly increased, and on long, globe-spanning routes it may stretch to a small delay in page load. Busy or overloaded proxy servers, on the other hand, are likely to make the browsing experience miserable. At WonderProxy, busy and overloaded isn’t our style.

[1] Building a secure connection requires your computer and the server to exchange messages back and forth three times (three round trips). If you know the ping time between yourself and a server (and we have a handy global ping table), you can get a rough estimate of how long it will take to connect to a secure server by tripling the ping time.

[2] This seems counterintuitive, but remember that connections on the Internet are all owned by companies, and companies willing to pay more may receive priority treatment, or even access to better and shorter connections. A better-peered proxy server could pull traffic onto a better route than it would normally take. Joel Spolsky has a great story about using an Akamai product to speed up Copilot, their remote desktop control software.