Test code standards for Playwright & Python

Once you get started with Playwright and Python and solve the common challenges in those tests, you can crank out dozens or hundreds of tests, so it's time to start organizing them. Today we'll work through a real code sample with examples of common code patterns. We'll discuss options for organizing your code, along with some common patterns for running your tests under continuous integration.

If you've worked through the other tutorials, downloading and installing the sample code is a five-minute job. With the code installed, you can read, review, and explore it yourself; this document is just a guide along the way. So let's start by downloading and running the tests.

Running the sample code

The directions below assume you've installed Python and Playwright. You'll also need Node.js (its installer includes npm, the Node package manager). Once that's done, the instructions below set up a simple local web application, then download and run the tests.

- Download the sample code from https://github.com/WonderNetwork/heusserm by clicking the green "Code" button. You can clone the repository or download a zip file and expand it.
- In a terminal, navigate to the organization folder in the project you just downloaded. That folder has a subfolder, shopping_cart_test_project, with sample tests.
- Run npm install to download the live-server package we'll use during our testing.
- Run npm run server to start the local test server. Playwright will run its tests against this server.
- Check the server by going to http://127.0.0.1:8080/simple-store.html#catalog
- Run the tests:
  - cd into shopping_cart_test_project
  - Run pytest --headed --slowmo 250 (--headed shows the browser window, and --slowmo 250 slows each Playwright operation by 250 milliseconds so you can watch the test work)
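If you would rather check the server from Python than from a browser, here is a minimal sketch using only the standard library. The helper name server_is_up and the two-second timeout are illustrative choices, not part of the sample project. The URL is the one from the steps above without the #catalog fragment, since a browser never sends the fragment to the server anyway.

```python
import urllib.request

# Hypothetical helper (not in the sample project): confirms the local
# live-server answers before any browser is launched.
STORE_URL = "http://127.0.0.1:8080/simple-store.html"


def server_is_up(url=STORE_URL, timeout=2.0):
    """Return True if a GET request to url succeeds with a 2xx/3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except OSError:
        # URLError, HTTPError (4xx/5xx), refused connections, and socket
        # timeouts are all OSError subclasses
        return False


if __name__ == "__main__":
    if server_is_up():
        print("server is up")
    else:
        print("server not reachable; run npm run server first")
```

One way to use it, again as an assumption, is to call it at the start of a session-scoped fixture in conftest.py and stop the run with pytest.exit() when it returns False, so a missing server produces one clear message instead of dozens of browser timeouts.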

With the files downloaded, you're free to look at the code itself, starting with the page directory.

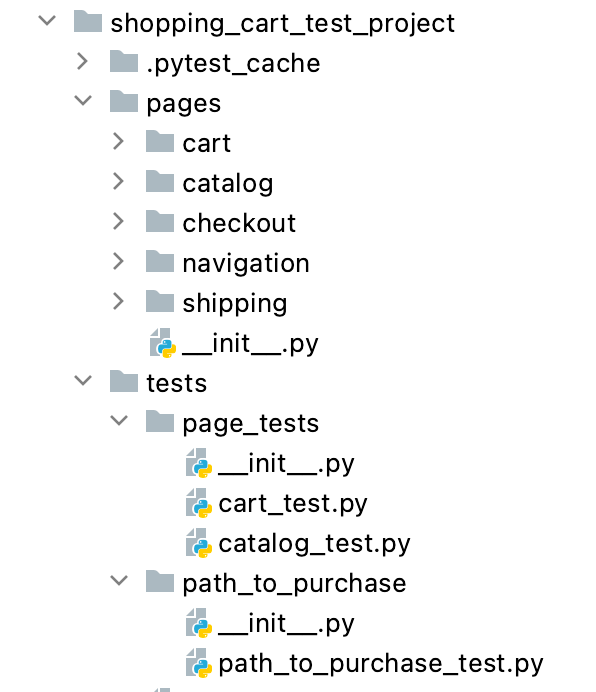

shopping_cart_test_project

Notice there is a subdirectory for every major part of the application, from the shopping cart to shipping. Those subdirectories include page objects: Python classes that describe a "page" on the test website. In a page object, the buttons and actions are represented in code as methods, while the data on the page is read through methods such as get_items(). The code for the shopping cart page object is listed below. Notice the methods get_items(), get_total_cost(), and checkout().

Page objects make it possible to compose a test from high-level function calls that encapsulate page logic and read more like English. When the page or a business process changes, the test code only needs to change in one place: the page object. For example, if the page changes so that the total cost only appears after "recalculate" is clicked, the get_total_cost() method has to change and nothing else.

Here's the code.

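# Assumption: base holds the store URL and is defined elsewhere in the sample
# project; this value matches the local server started earlier.
base = "http://127.0.0.1:8080/simple-store.html"


class CartPageModel:
    def __init__(self, page):
        # page is a Playwright Page (for example, pytest-playwright's page fixture)
        self.page = page

    def goto(self):
        # Load the store only if the browser is not already on it, then open the cart
        if base not in self.page.url:
            self.page.goto(base)
        self.page.locator('#cart-menu-item').click()

    def get_items(self):
        # Return one dict per cart row; every value is a string read from the page
        cart_items = []
        cart_item_locators = self.page.locator(".cart-item-container")
        for idx in range(cart_item_locators.count()):
            item = cart_item_locators.nth(idx)
            cart_items.append({
                "description": item.locator(".cart-item-description").inner_text(),
                "price": item.locator(".cart-item-price").inner_text(),
                "quantity": item.locator(".cart-item-quantity > input").input_value(),
            })
        return cart_items

    def get_total_cost(self):
        return self.page.locator(".cart-total-cost").inner_text()

    def checkout(self):
        self.page.locator('.cart-checkout').click()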
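To make the "recalculate" example concrete, here is a sketch of the only method that would change. The .cart-recalculate selector is a hypothetical name, not taken from the sample store, and the class is trimmed to the parts the sketch needs.

```python
class CartPageModel:
    """Trimmed cart page object: only the method affected by the change."""

    def __init__(self, page):
        self.page = page

    def get_total_cost(self):
        # Assumed new page behavior: the total only appears after the user
        # clicks "recalculate". ".cart-recalculate" is a hypothetical selector.
        self.page.locator(".cart-recalculate").click()
        return self.page.locator(".cart-total-cost").inner_text()
```

Tests such as test_item_costs_totaled keep calling cart.get_total_cost() exactly as before, so none of them needs an edit.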

The tests themselves are Python functions built on top of these page objects, and they belong in the tests subdirectory. Here are two sample tests that check the cart page.

import pytest

# CartPageModel and setup_cart_items come from the project's own modules;
# the page fixture is provided by the pytest-playwright plugin.


@pytest.mark.cart
def test_items_added_from_catalog_appear_in_cart(page):
    # setup
    setup_cart_items(page)
    cart = CartPageModel(page)
    cart.goto()

    # verify items
    items_in_cart = cart.get_items()
    assert len(items_in_cart) == 2
    assert items_in_cart[0]['description'] == 'blinker fluid'
    assert items_in_cart[0]['price'] == '10'
    assert items_in_cart[0]['quantity'] == '2'
    assert items_in_cart[1]['description'] == 'alternator'
    assert items_in_cart[1]['price'] == '95'
    assert items_in_cart[1]['quantity'] == '1'


@pytest.mark.page_test
@pytest.mark.cart
def test_item_costs_totaled(page):
    # setup
    setup_cart_items(page)
    cart = CartPageModel(page)
    cart.goto()

    # verify total cost
    assert cart.get_total_cost() == '115'
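The hard-coded '115' in test_item_costs_totaled is the price × quantity total of the two items the first test checks: 2 × 10 + 1 × 95 = 115. One option, sketched here as an assumption rather than something the sample project does, is to compute the expected total from get_items() so the two numbers cannot drift apart. Because get_items() returns strings, the hypothetical helper below converts them with Decimal, which also copes with prices such as "10.50".

```python
from decimal import Decimal


def expected_total(cart_items):
    """Sum price * quantity over dicts shaped like CartPageModel.get_items().

    Hypothetical helper: values scraped from the page are strings, so they are
    converted to Decimal before any arithmetic.
    """
    return sum(
        (Decimal(item["price"]) * Decimal(item["quantity"]) for item in cart_items),
        Decimal(0),
    )


items = [
    {"description": "blinker fluid", "price": "10", "quantity": "2"},
    {"description": "alternator", "price": "95", "quantity": "1"},
]
print(expected_total(items))  # prints 115
```

In a test this could read assert Decimal(cart.get_total_cost()) == expected_total(cart.get_items()). The trade-off is that such an assertion only checks the page's arithmetic; the first test is still needed to confirm the right items reached the cart.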

Running select tests

Sometimes there is value in running a full suite of tests. At other times, fast feedback is worth more than waiting for every test to finish. Even when the tests run in parallel, running an entire suite can take a great deal of cloud computing power. In other cases, the test environment might reflect only part of the application. And UI tests tend to take much longer than unit tests, which slows feedback further.

One way to resolve these problems is to run a subset of the tests when there isn't enough time to run them all. In Python, pytest supports this by letting you "mark" tests and then run only the tests that carry a certain mark, such as smoke_test, shopping_cart, search, and so on. In our code example, the page tests are marked with page_test, while the cart-specific tests are marked with cart.

Here is how those two markers appear in the code, as decorators built from pytest.mark:

@pytest.mark.page_test
@pytest.mark.cart
def test_item_costs_totaled(page):
    # setup
    setup_cart_items(page)
    cart = CartPageModel(page)
    cart.goto()

    # verify total cost
    assert cart.get_total_cost() == '115'
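Decorating every function works, but when all the tests in one file share the same markers, pytest also reads a module-level variable named pytestmark. Here is a sketch for a cart-only test module, assuming every test in it should carry both markers:

```python
import pytest

# Module-level marks (sketch): pytest applies every mark in this list to each
# test function defined in the same module, so the per-test decorators
# @pytest.mark.page_test and @pytest.mark.cart become unnecessary there.
pytestmark = [pytest.mark.page_test, pytest.mark.cart]
```

pytest -m cart selects these tests exactly as it would decorated ones. The trade-off is visibility: someone reading a single test no longer sees its markers next to the def line.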

To run only the tests with a given marker, pass it to the -m command-line option:

pytest -m cart

On the other hand, you might want to run all the tests except those with a specific marker. In that case, use the -m option and put not before the marker name, quoting the expression so the shell passes it as a single argument:

pytest -m "not cart"

Marker expressions can also use or to select tests that have either of two markers, and and to select tests that have both:

pytest -m "cart or catalog"

pytest -m "cart and page_test"

The file shopping_cart_test_project/pytest.ini holds the configuration for these markers. Every new marker needs to be registered in that file; forgetting to do so produces a warning when the tests run (or an error, if pytest runs with --strict-markers). The pytest documentation on markers has additional detail.
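The project registers its markers in pytest.ini. pytest also supports registering them from a conftest.py file through the pytest_configure hook and config.addinivalue_line. Here is a sketch; the marker descriptions are illustrative, not copied from the project.

```python
# conftest.py (sketch): registers markers in code instead of pytest.ini.
# The descriptions after each colon are illustrative assumptions.
MARKERS = {
    "page_test": "tests that exercise a single page",
    "cart": "tests for the shopping cart",
    "catalog": "tests for the product catalog",
}


def pytest_configure(config):
    # pytest calls this hook at startup; appending to the "markers" ini value
    # is what registers each marker and silences the unknown-marker warning.
    for name, description in MARKERS.items():
        config.addinivalue_line("markers", f"{name}: {description}")
```

Running pytest --markers lists every registered marker, which is a quick way to confirm the registration worked. Keeping markers in one place (either file) matters more than which file you choose.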

Keeping code and tests synchronized

In organizations with separate test and programming teams, changes the programmers make can cause tests to fail even though the software works correctly. The tests fail because the expectations for what the software should do have changed, and those expectations are codified in the tests. That creates a new business process in which the developers "break" the tests and the testers fix them.

This is a terrible idea.

One way to reduce these false failures is to do the work in separate branches. Each new story or feature is developed in a feature branch, then handed to a tester who makes the tests pass before merging to the "main" branch. Better yet, have the programmers who make the changes also clean up the tests before they hand the work off. Testers can add new tests, but programmers need to make sure all existing tests pass. Thus, "the story is not done until the tests run." With page objects, testers can work on code snippets and check things that are "broken" but have no corresponding tests yet, so the build will not fail.

The tests themselves tend to be small, with fewer than a dozen lines of code. When a test needs more than that, move the steps into helper functions that encapsulate a business process. If a helper function is limited to a single page, push it into that page's page object.
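The sample tests already lean on one such helper, setup_cart_items(page), whose body isn't shown. As a hypothetical sketch of a helper that spans two pages, the function below takes page objects as arguments. The catalog page object and its add_to_cart(description, quantity) method are assumptions; goto() and checkout() mirror CartPageModel.

```python
def purchase_items(catalog, cart, items):
    """Hypothetical business-process helper spanning two pages.

    catalog: a catalog page object assumed to provide goto() and
             add_to_cart(description, quantity) (assumed method name).
    cart:    a CartPageModel; uses its goto() and checkout() methods.
    items:   iterable of (description, quantity) pairs.
    """
    # Step 1: add every requested item from the catalog page
    catalog.goto()
    for description, quantity in items:
        catalog.add_to_cart(description, quantity)

    # Step 2: move to the cart and check out
    cart.goto()
    cart.checkout()
```

Because it touches two pages, this helper lives outside both page objects; a helper that touched only the cart would belong inside CartPageModel instead.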

Summary

Page objects let you keep code DRY (Don't Repeat Yourself) and keep tests high-level and business-focused. Keep the tests short and simple enough that a business person can read them and understand what they are trying to do. Use the directory and file structure to show what each test covers, so you can run only the part of the suite you are most interested in. If the test suite grows large, consider pytest markers so you can run only a certain group of tests, such as the search or catalog tests. Find ways to keep the existing tests passing, so that a failure represents a real problem, not just a change made in the wrong place at the wrong time.

With those ideas in mind, you should have enough to build a test suite that scales to several teams, or a single long-running team.