How we ditched spreadsheets and found Testpad

Last year at WonderProxy, we decided to refactor our browser extension, the WonderSwitcher, in React.

At the time, we'd been using Google Sheets to manage our manual testing process for about four months. Spreadsheets had been working well enough for testing small and medium-sized releases. But when we decided to refactor the Switcher, we knew we'd need a better way to organize and track our manual testing, to make sure the new version of the extension maintained the same great functionality and experience our users had come to expect.

Here's the story of how we used to test our browser extension at WonderProxy, and why we switched from spreadsheets to Testpad, a simple yet flexible test management tool designed to make manual testing easier.

How we used to test the Switcher

The WonderSwitcher is a browser extension for Chrome, Firefox, and Edge that enables interactive localization testing right in your browser. When I joined WonderProxy in February 2018, the Switcher had only been available to the public for two weeks. We had no dedicated QA process, and any testing was done by the two developers working on the Switcher.

A few months later, I was asked to review the extension for bugs and create cards for them on our Trello board. Confession time: my background isn't in QA at all. These days, I'm a project manager at WonderProxy, but my background happens to be in librarianship. That said, my previous experience includes things like evaluating software vendors and platforms, conducting usability and accessibility tests, working with designers and developers, launching new products and services, and so on. So taking on some testing work was a pretty natural fit for me.

I poked around the Switcher a bit and made Trello cards for any functionality bugs and UX issues I discovered. When our developers started tackling some of those cards, I helped out with testing. Before long, I was testing almost every PR in our Switcher repo before we shipped any changes.

At first, I was testing PRs informally and leaving comments on GitHub or Trello about anything that stood out to me. As I started testing more builds for more complicated features or fixes, jamming my feedback into comment boxes wasn't cutting it anymore. So one day I put my results in a spreadsheet instead, and that became our new workflow.

Why we needed a better solution

Spreadsheets are a great tool when used wisely, and I've had a lot of fun making spreadsheet magic ✨ over the years. But just because you can do something with a spreadsheet doesn't mean you should. That's how you end up in Excel hell.

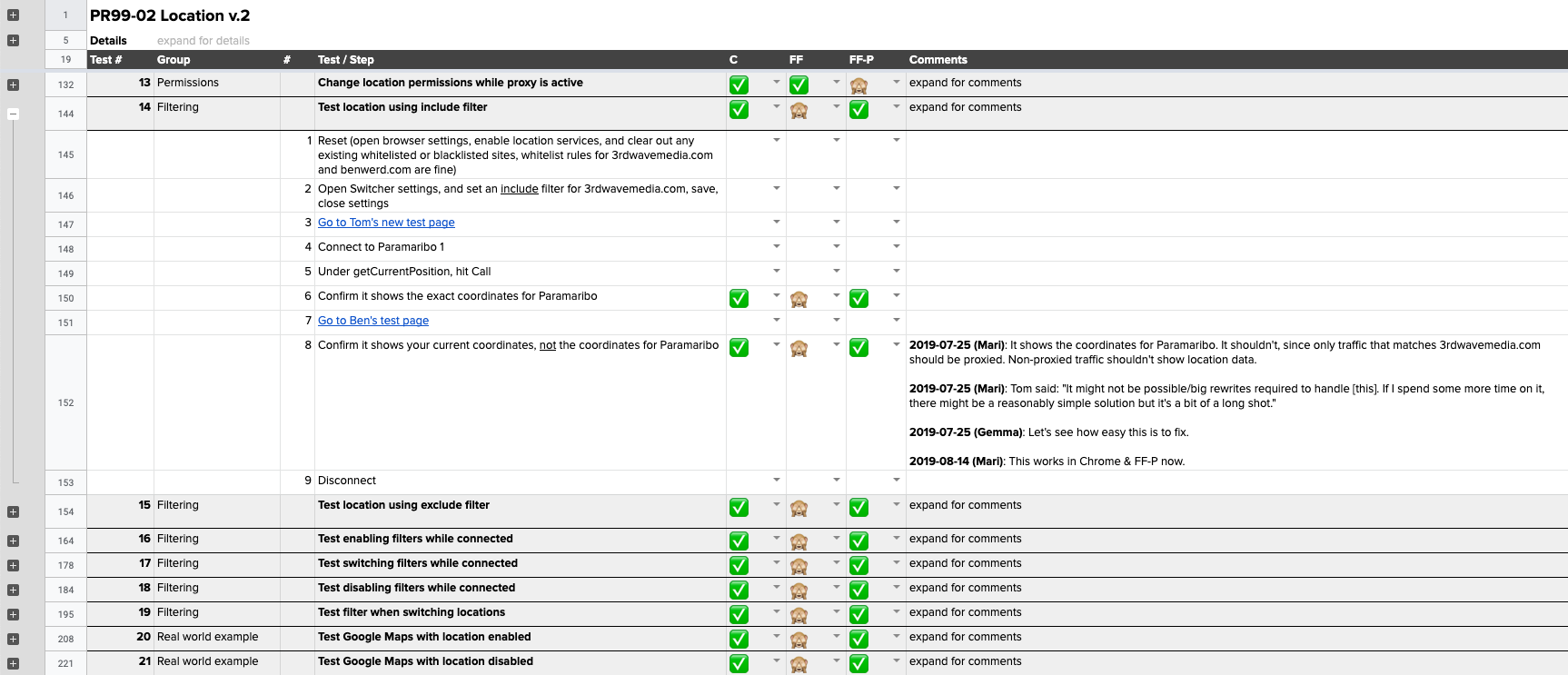

Our spreadsheet approach to test management was fine, but not great. We had a single Google sheet on our shared drive, with a tab for each pull request. After some experimentation, I developed a template that looked something like this:

- A header area with useful info and links to the Trello card, GitHub PR, etc.

- A heading row for each test case, with a unique ID, a descriptive name, and a thematic group

- Rows for each step needed to execute each test case, grouped together using the expand/collapse rows feature

- Columns for test results in each browser & browser mode

- Drop-down menus in those columns, with a highly sophisticated emoji evaluation scheme (✅ for pass, ❌ for fail, plus other emojis for questions, not tested, etc.)

- A comments column
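To make that layout a bit more concrete, here's a minimal Python sketch of the same structure as data. Every name in it (`SheetCase`, `Step`, the status strings, the sample test case) is hypothetical and only for illustration; the real template lived entirely in Google Sheets.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Step:
    """One row under a test case: a step plus one result per browser column."""
    description: str
    # Maps a browser column (e.g. "Chrome") to an assumed status string.
    results: Dict[str, str] = field(default_factory=dict)
    comment: str = ""


@dataclass
class SheetCase:
    """One heading row: unique ID, descriptive name, thematic group, and steps."""
    case_id: str
    name: str
    group: str
    steps: List[Step] = field(default_factory=list)

    def failing_browsers(self) -> Set[str]:
        """Return the browsers in which at least one step failed."""
        return {
            browser
            for step in self.steps
            for browser, status in step.results.items()
            if status == "fail"
        }


if __name__ == "__main__":
    # Made-up example data, not a real Switcher test.
    case = SheetCase(
        case_id="TC-1",
        name="Open the extension popup",
        group="Core",
        steps=[Step("Click the toolbar icon", {"Chrome": "pass", "Firefox": "fail"})],
    )
    print(case.failing_browsers())
```

Even in this toy form, you can see where the friction comes from: each step only has room for one result per browser, so there's nowhere natural to keep the history of a retest.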

Having a dedicated place to define and document our tests was a step up from our previous ad hoc approach. I could identify appropriate test cases, write out clear and repeatable steps, track test results, and provide details in a more organized fashion. Our developers could respond to specific issues using the comment feature. Once issues were resolved, they could be marked with a checkmark, which made it easier to track our progress.

But there were also problems with the spreadsheet approach:

- Writing tests in a spreadsheet can be annoying if you don't normally work in a linear fashion. I tend to identify some obvious test cases first, flesh out some steps, then rearrange the cases around a dozen times, then add some new ones, etc. The end result in the screenshot might look reasonably readable, but that's only because I cleaned it up after the fact.

- Retesting new builds and communicating the results clearly and concisely in a spreadsheet is challenging. In the example sheet above, I overwrote the test results when I retested new builds (e.g. changed ❌ to ✅), but preserved the full history in the comment cell, which made some of the rows very long and difficult to read. Other times, I just created a "v2" copy of the sheet, cleared out the history, and started again. Neither of these solutions was ideal.

- Fussing with spreadsheet templates takes time away from actually testing software. Spreadsheets are a flexible tool that can be used in many different ways. But they can also be a pain to maintain. I knew our template wasn't quite right yet, so I kept wanting to experiment with ways I could make it easier to use. But ultimately, this distracted me from what I was actually trying to accomplish: testing our product.

I continued to experiment with our template for a little while. But when we decided to refactor the Switcher, I knew we needed to start looking into a better solution for managing our tests.

Why we chose Testpad

I don't remember how I first discovered Testpad, to be honest. Either I went looking for test management tools for inspiration when I was experimenting with our template, or I stumbled on it by accident while doing research for another project. Either way, it looked exactly like the kind of tool we needed.

Some Testpad features that we found attractive:

- Simple, flexible structure: Testpad's website describes the tool as a "spreadsheet–checklist hybrid on steroids." We didn't struggle with a huge learning curve when we started using Testpad, since the basic structure is straightforward and similar to the spreadsheets we were already using, just a lot more powerful. We found it pretty easy to dive right into testing and figure out all of Testpad's features along the way.

- Intuitive writing experience: Writing and organizing tests using Testpad's free-form script editor is a much more organic experience. You can drag and drop rows to rearrange them, group rows together and create hierarchies by indenting them, comment out non-test rows using

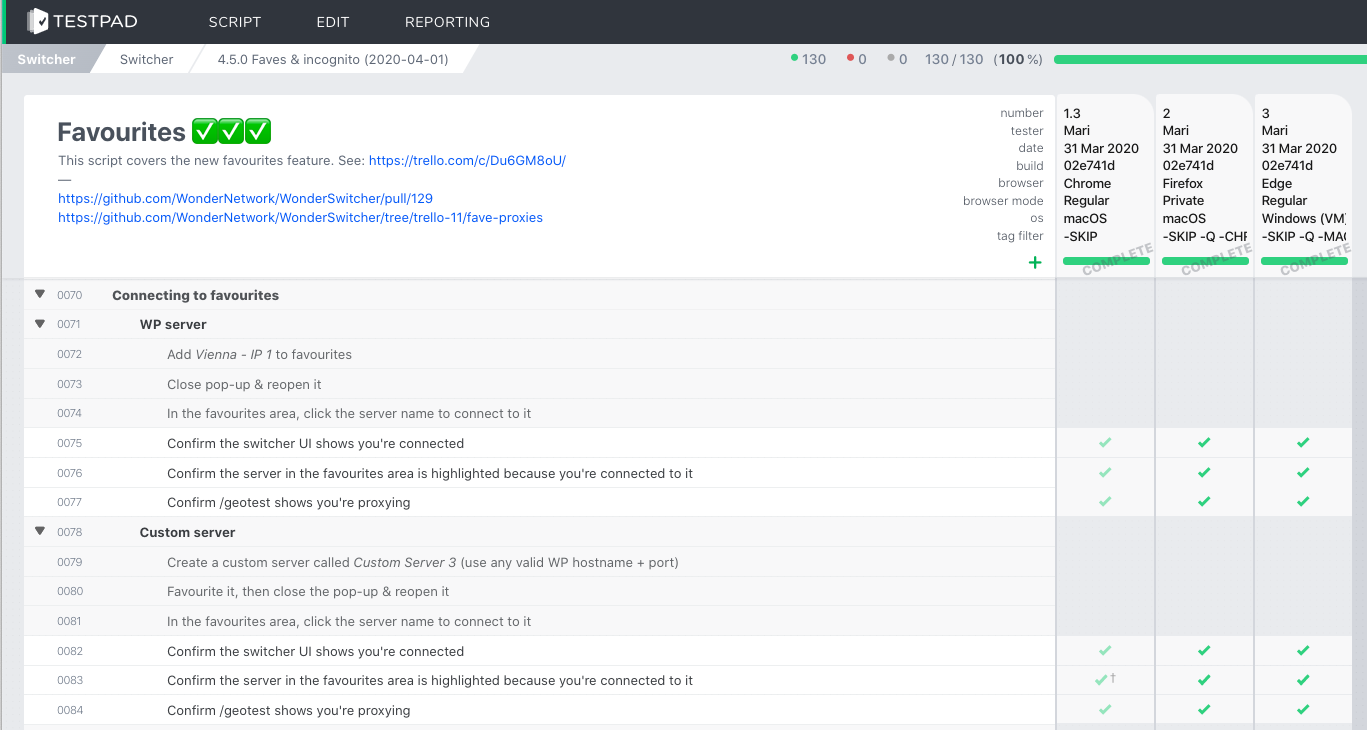

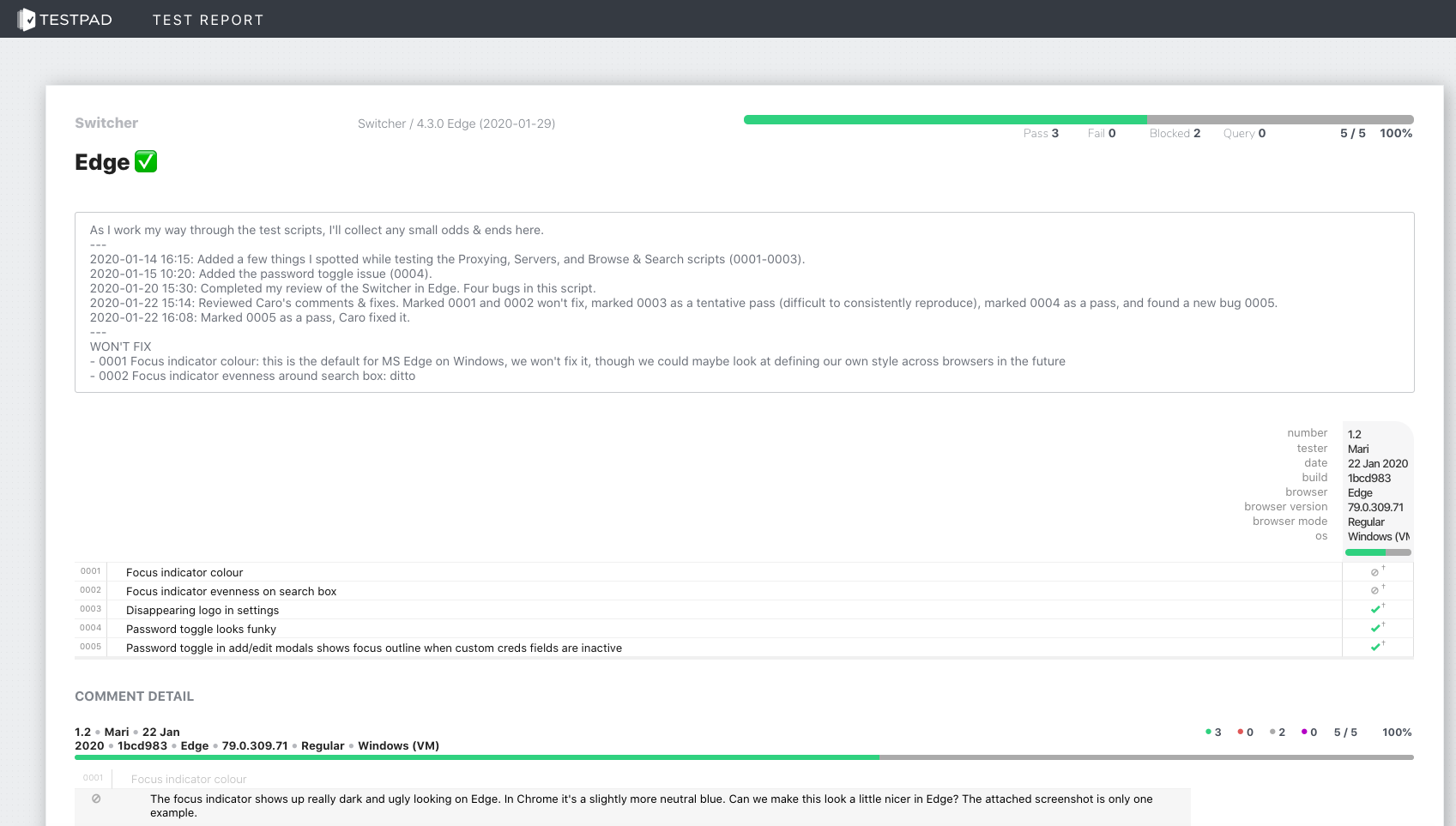

//, and so on. There's also support for Gherkin syntax highlighting, if BDD is your jam. - Test run management: Testpad supports both multiple test runs of a single script in different environments and retests of a new build in the same environment. Test runs appear in separate columns, with space at the top to provide details about the environment, build, tester, or other custom fields. Retests are new columns that replace the results from the previous build, which are still preserved but hidden by default, to reduce clutter. Testpad also has tags, which make it easy to include or exclude tests from a particular run or retest. This is handy if you need to execute different tests in different environments, modify your tests to reflect code changes, or just want to run through some smoke tests.

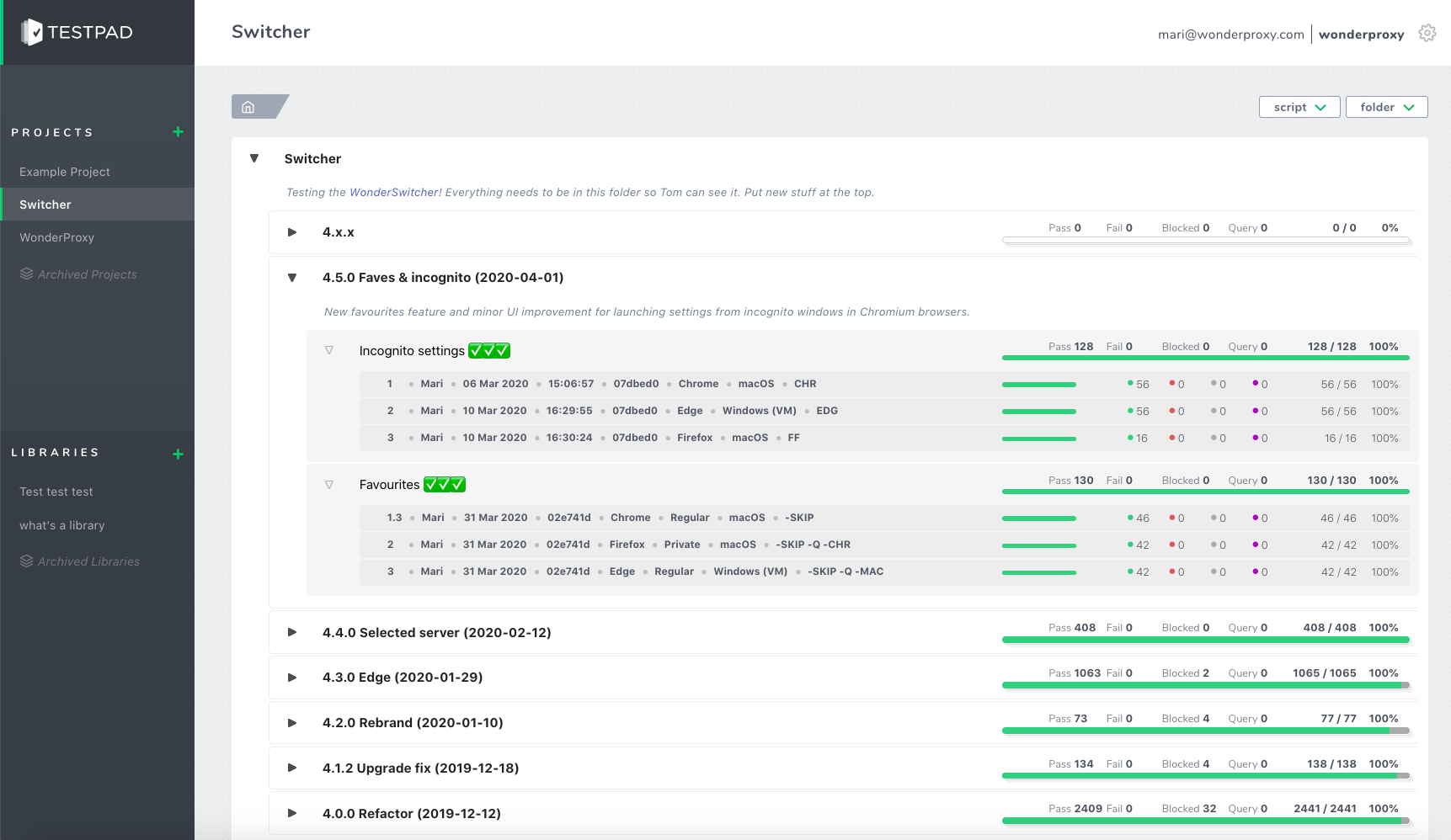

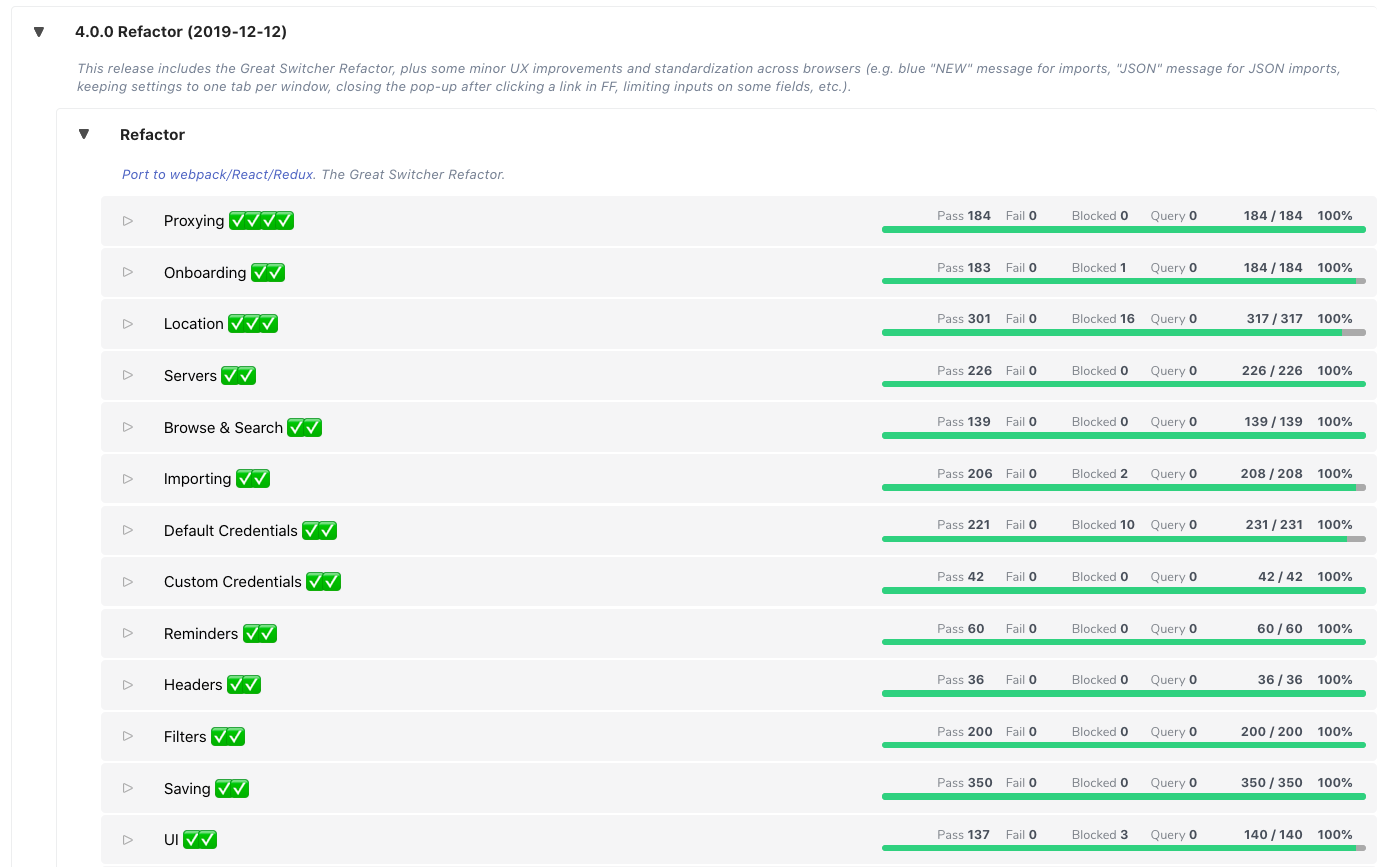

- Progress tracking: Testpad displays a progress bar on each run and each script that shows how many tests passed, failed, are blocked, were excluded, have queries, or haven't been tested yet. It also displays stats per script and folder in the project view, so you can see how a release is progressing. This came in especially handy during the refactor. It's not only useful for showing your boss how things are coming along; it's also psychologically satisfying to watch the green bars grow as you work.

- Goldilocks fit: We're a small team, so what's right for a large enterprise isn't necessarily right for us. We wanted a solution that would be a step up from Google Sheets, but wouldn't be too big, too complex, or too expensive for our needs. Testpad seemed like just the right fit.
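If it helps to picture the run, retest, and progress ideas from the list above, here's a rough Python sketch of how they fit together. This is not Testpad's API or data model; `Run`, `retest`, `progress`, and the status names are all assumptions made up for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List

# Assumed status names, loosely following the progress bar categories above.
STATUSES = ("pass", "fail", "blocked", "query", "excluded", "untested")


@dataclass
class Run:
    """One column of results for a script in a given environment."""
    environment: str
    # One dict of results per build, oldest first. Older builds stay in the
    # list (preserved) but only the newest one is used for progress.
    builds: List[Dict[str, str]] = field(default_factory=lambda: [{}])

    def record(self, test_id: str, status: str) -> None:
        """Store a result for the current (newest) build."""
        if status not in STATUSES:
            raise ValueError(f"unknown status: {status}")
        self.builds[-1][test_id] = status

    def retest(self) -> None:
        """Start a fresh set of results for a new build, keeping the old ones."""
        self.builds.append({})

    def progress(self, test_ids: List[str]) -> Counter:
        """Tally the newest build's results; anything missing is 'untested'."""
        latest = self.builds[-1]
        return Counter(latest.get(test_id, "untested") for test_id in test_ids)


if __name__ == "__main__":
    run = Run("Firefox")           # hypothetical environment name
    run.record("login", "fail")    # hypothetical test IDs
    run.retest()
    run.record("login", "pass")
    print(run.progress(["login", "logout"]))
    print(run.builds[0])           # the first build's results are still there
```

The point of the sketch is the contrast with a spreadsheet: a retest adds a new set of results instead of overwriting cells, so the history survives without cluttering the current view.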

Migrating to Testpad

Testpad recognizes that many of their users may be migrating to Testpad from spreadsheets. They have built-in support for importing tests from spreadsheets, and helpful instructions for converting different spreadsheet structures to the right format for import.

Ultimately, I decided not to import our existing spreadsheets to Testpad en masse. We didn't have a comprehensive test suite that covered all of the Switcher's functionality, only tests for a few months of releases. Because we were refactoring the extension, moving to Testpad gave us a chance to start fresh and organize our tests more purposefully.

We created a Switcher project in Testpad, and set up folders for each release. Here's what our project structure looks like today:

Inside each release folder, there are either test scripts or more subfolders. For smaller releases, we usually ship a few different features or bug fixes together, so there will be a separate script for each one. Each script typically corresponds to a single card on our Trello board and a single PR in our GitHub repo. You can expand the script in the project view to show the test run details.

For the refactor, I set up about a dozen scripts to cover different features of the Switcher:

Once your scripts are set up in Testpad, it's easy to reuse them. If we extend an existing feature, I can make a copy of the appropriate script and add or modify test cases as needed. When we launched the Switcher for Microsoft Edge back in January, I copied the entire Refactor folder so I could rerun all the test scripts in the new browser. If you're reusing test scripts a lot, it's also possible to build a library of templates that can be dragged and dropped into your projects.

Manual testing with Testpad

Testpad has been working out well for us. But like any new tool, we've had to spend some time figuring out how to adapt it to our needs. Two things we've been thinking about: how to use Testpad with an external developer, and how to communicate and collaborate effectively as a team.

Working with an external developer

Most Switcher development these days is handled by two developers, one internal and one external. The external developer works exclusively on the Switcher, but Testpad doesn't currently let you restrict users to specific projects. While Testpad allows you to share a read-only version of your test results (called reports) with guests via an access token, only scripts or folders can be shared, not entire projects.

Our workaround was to stick everything in our Switcher project under a single parent folder and generate a guest access token for that folder, so our external developer can see everything we're working on. The user experience isn't quite as nice as having full access, but it gets the job done, and the parent folder trick has saved us some link management headaches. Just beware that guest reports don't show everything that regular users see, like script descriptions and test details.

Communicating and collaborating

One feature we're mourning from our spreadsheet days is comment threads. When we were working in Google Sheets, our developers could use the comment feature to respond to a particular test result or ask questions, and we could have a discussion in context. It was easy to have multiple discussions in parallel, see how many discussions were still open, and hide those comment threads when they were resolved.

Unfortunately, Testpad doesn't have support for discussion threads. We've had to shift our discussions to other platforms like Slack, Trello, or GitHub. This can make it tricky to keep track of where all those discussions are happening and what state they're in, especially since we're often working asynchronously.

There have been other collaboration challenges, too. When we were testing the refactor, we often had multiple scripts on the go at once, all in varying states. Testpad's progress bars are great for seeing how many tests have passed or failed, but less great for seeing at a glance which scripts are ready for a developer to work on, which scripts are ready to be retested, which scripts are stalled, and which scripts are truly done.

We've had to come up with our own ways of communicating the status of each script effectively. Some examples of things we've tried:

- Adding emojis and status notes to script names, e.g. an emoji followed by READY FOR DEV

- Using the report comments to keep an activity log for each script

- Using the report comments to highlight any outstanding bugs
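As a small illustration of the first idea, here's a hedged Python sketch that groups script names by a status note embedded in the name. Only READY FOR DEV comes from our actual naming; the other labels, the function names, and the sample script names are hypothetical, and nothing here talks to Testpad.

```python
import re
from collections import defaultdict
from typing import Dict, List

# Only "READY FOR DEV" is a real label; the rest are assumed for illustration.
STATUS_LABELS = ("READY FOR DEV", "READY FOR RETEST", "STALLED", "DONE")


def status_of(script_name: str) -> str:
    """Return the first known label found as whole words in the name."""
    for label in STATUS_LABELS:
        # Word boundaries keep "DONE" from matching inside "abandoned".
        if re.search(r"\b" + re.escape(label) + r"\b", script_name, re.IGNORECASE):
            return label
    return "UNLABELED"


def group_by_status(script_names: List[str]) -> Dict[str, List[str]]:
    """Bucket script names by the status label they carry."""
    groups = defaultdict(list)
    for name in script_names:
        groups[status_of(name)].append(name)
    return dict(groups)


if __name__ == "__main__":
    names = ["READY FOR DEV Popup layout", "DONE Server list", "Settings page"]
    print(group_by_status(names))
```

A consistent label is what makes this kind of at-a-glance grouping possible, whether a script does it or you just scan the project view by eye.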

Conclusion

The truth is we're still figuring some things out. But since we migrated to Testpad, we're spending a lot less time fussing with our test management tool, and a lot more time actually testing and improving our browser extension. Having well-documented tests in Testpad has also allowed our developers to delve into setting up Jest tests. But that's another blog post!