Rich social cards for your single-page app with SlimPHP

We're PHP fans at WonderProxy. We've stuck with it through the bad times, through the trials, and finally arrived in 2021, where PHP Is Pretty Great, Actually™. Most of our public website is still classic PHP templating. Who needs Twig when you've got <?= ?>, amirite?

That's not to say we've completely shunned the 21st-century shinies. A couple of years ago we replaced our user account dashboard with a single-page app (SPA) built on ReactJS. PHP still drives state and behavior with the SlimPHP framework, but everything our users touch is composed with JavaScript. It's fast, and it's slick, and it's not public, so we never had to care about how those pages might show up in search engines or on social media.

Well.

A wild problem space appears

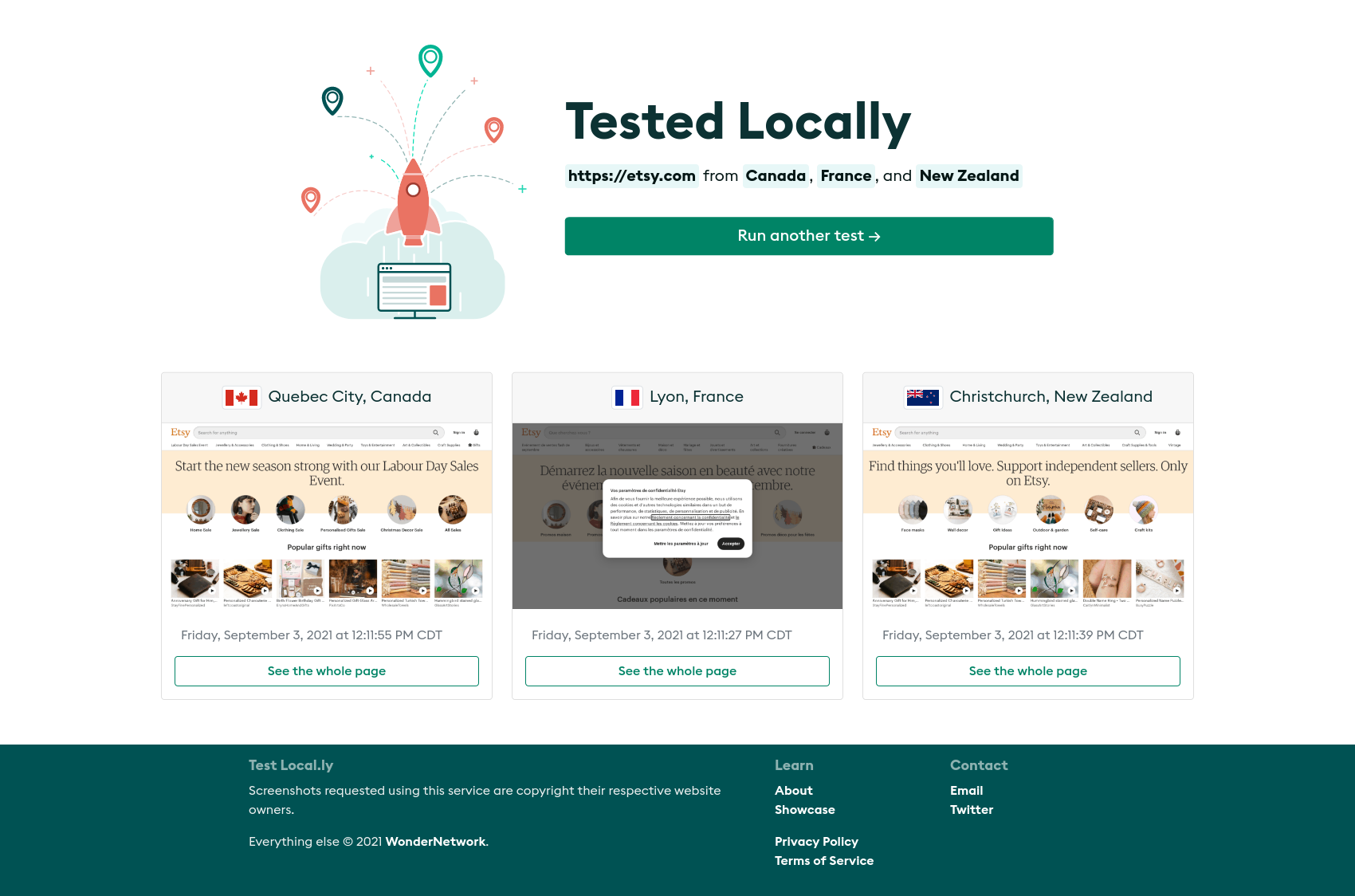

Enter Test Local.ly, a new service we just launched a few days ago. Test Local.ly leverages the WonderProxy network to take localized screenshots of websites. You can make sure Brazilians are actually seeing Portuguese, confirm that your GDPR banner is showing up in France and not Chile, or just check out how different news outlets change their headlines depending on where you are. It's neat. It's small right now, but we've got big plans for the future.

Test Local.ly is a SPA built with ReactJS (and SlimPHP in the backend again). I considered going halfsies when I was building it, only React-ifying the interactive bits and leaving the rest (like our privacy policy, or the About page) on the server side, but I started thinking about all the stylesheets and fonts and design I'd have to duplicate between the interactive bits and the static bits and recoiled in horror. Client-side work is not my jam (ironic, I know). So it's a SPA, top to bottom, and that means I needed a way to make it look pretty on Twitter.

But let's back up a minute: Why is this even a problem? Social media link sharing has evolved a bunch since the days of just-use-a-link-shortener-and-pray. You can drop any old link on Twitter or Facebook and it'll work fine, but what you REALLY want is a cute social sharing card, with a preview of the title and description and logo or image. The image is key: that's what draws the eyeballs. That cute preview is made possible by the Open Graph Protocol.

Get testing with #Ruby and #Selenium https://t.co/W0LHelxdaE #testautomation #automatedtesting

— WonderProxy (@WonderProxy) August 30, 2021

Facebook published the Open Graph protocol about a decade ago as a way to describe a page's content more richly than regular meta tags allowed. I don't just want title and description and keywords; I want authors! Photos! Videos! With Open Graph meta tags, websites can load all that information into a format that web crawlers can read and store without parsing the whole page. The crawlers bring the rich data back to their search engines and social media networks, which turn it into nice-looking previews. Open Graph is ubiquitous now, so if you want those previews for your website, you need to populate those tags.
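For the curious, populating those tags is mostly string building plus careful escaping. Here's a minimal plain-PHP sketch; renderOpenGraphTags() is a hypothetical helper, not the Test Local.ly code (which uses a Plates template instead). It turns an array of properties into og: meta tags and runs every value through htmlspecialchars(), so a stray quote or ampersand in a page title can't break the markup.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: render Open Graph <meta> tags from an array whose keys
 * are property names without the "og:" prefix (title, type, url, image and so on).
 */
function renderOpenGraphTags(array $properties): string
{
    $tags = [];
    foreach ($properties as $name => $content) {
        // Escape the property name and the value for use inside
        // double-quoted HTML attributes.
        $tags[] = sprintf(
            '<meta property="og:%s" content="%s" />',
            htmlspecialchars((string) $name, ENT_QUOTES | ENT_HTML5, 'UTF-8'),
            htmlspecialchars((string) $content, ENT_QUOTES | ENT_HTML5, 'UTF-8')
        );
    }

    return implode("\n", $tags);
}

echo renderOpenGraphTags([
    'title' => 'example.com on Test Locally',
    'type' => 'website',
]), "\n";
// <meta property="og:title" content="example.com on Test Locally" />
// <meta property="og:type" content="website" />
```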

That's where life gets exciting if your website is a SPA. A SPA loads itself into an HTML template that's the same for every page, because the page content is determined by the SPA. When you visit the page in your browser, the rendering sequence looks something like this:

- Load the base HTML

- Load the JS that makes up your app

- Let the JS render the right content for the page

When a web crawler visits your page, it goes more like this:

- Load the base HTML

and that's it. Crawlers (except Google's) don't wait for the JS to deliver the real page content. On social media and in search engines, whatever Open Graph tags exist in the base HTML template are the tags that will apply to every page on your site.

That's ok for most of the pages on Test Local.ly – seeing generic information on the card for our privacy policy page is not the end of the world – but we wanted nicer previews for the results pages. They're the whole point of the tool, and they should look it.

So that was my problem: I have a SPA, and I want page-specific Open Graph tags that load before the SPA loads.
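To make the problem concrete, here's a toy model in plain PHP. spaShell() and crawlOpenGraph() are hypothetical: the first stands in for an SPA's base HTML, which is the same for every path, and the second mimics a social crawler by reading og: tags straight out of that HTML with PHP's DOM extension, never running the JavaScript. Real crawlers do more than this, but the outcome for an SPA is the same.

```php
<?php
declare(strict_types=1);

// Hypothetical SPA shell: the server returns this same HTML for every path,
// and the real page content only appears once /app.js runs in a browser.
function spaShell(string $path): string
{
    // $path is intentionally ignored; that's the whole problem.
    return <<<'HTML'
<!DOCTYPE html>
<html lang="en">
<head>
<title>Test Locally</title>
<meta property="og:title" content="Test Locally" />
<meta property="og:description" content="Generic site description" />
</head>
<body><div id="root"></div><script src="/app.js"></script></body>
</html>
HTML;
}

// Simplified model of a social crawler: read og:* tags from the raw HTML and
// stop there, without ever executing the JavaScript.
function crawlOpenGraph(string $html): array
{
    $previous = libxml_use_internal_errors(true); // silence HTML5 parse warnings
    $doc = new DOMDocument();
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $tags = [];
    $xpath = new DOMXPath($doc);
    foreach ($xpath->query('//meta[starts-with(@property, "og:")]') as $meta) {
        $tags[$meta->getAttribute('property')] = $meta->getAttribute('content');
    }

    return $tags;
}

// A results page and the privacy policy look identical to the crawler.
var_dump(crawlOpenGraph(spaShell('/tests/abc123')) === crawlOpenGraph(spaShell('/privacy'))); // bool(true)
```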

Option 1: Duplicate the site structure with HTML templates

The first possibility I considered was creating individual HTML templates for each page in my SPA (and by extension, duplicating the routes on the server side). That way, no matter what a visitor's (or crawler's) entry page was, they'd get the right Open Graph data. Each template would still load the SPA, so the only difference would be the meta tags.

The problem with that approach is that the data for the page would have to load twice: once for the Open Graph tags, and then once in the SPA itself. Crawlers wouldn't care, but I'd lose some performance for human visitors.

Option 2: Server-side rendering (and pre-rendering)

I shouldn't say I considered server-side rendering (SSR), because I didn't really. SSR requires something on the server side to render the SPA into static HTML, and then deliver the static HTML like a regular page to the browser. It takes some supporting infrastructure that we don't have at our fingertips at WonderProxy, and it's overkill for social media sharing. Twitter isn't crawling my entire site looking for keywords and headings, so I didn't need to go quite that far.

Option 3, and my winner: Different strokes for different folks

Credit to Axel Wittman at Epiloge for the idea I ran with: Give the social media crawlers what they want. The crawlers want Open Graph tags, so if the visitor is a crawler, give it the Open Graph tags and nothing else. It's like Option 1 above, except the static HTML only displays for the crawlers and it doesn't load the SPA.

Setting up SlimPHP

My Slim Framework routes looked something like this:

```php
use League\Plates\Engine;
use Psr\Http\Message\ResponseInterface as Response;
use Psr\Http\Message\ServerRequestInterface as Request;
use Slim\Interfaces\RouteCollectorProxyInterface;

// spa backend for async data and whatnot
$app->group('/api', function (RouteCollectorProxyInterface $app) {
    $app->get('/countries[/]', function (Request $request, Response $response) {
        /* return the list of countries available for screenshots */
    });

    $app->group('/tests', function (RouteCollectorProxyInterface $app) {
        $app->group('/{id}', function (RouteCollectorProxyInterface $app) {
            $app->get('[/]', function (Request $request, Response $response, string $id) {
                /* return the test details */
            });

            $app->get('/{server}[/]', function (Request $request, Response $response, string $id, string $server) {
                /* return details about one screenshot from the test */
            });
        });

        $app->post('[/]', function (Request $request, Response $response) {
            /* process a new test request */
        });
    });

    $app->any('[/{path:.*}]', function (Request $request, Response $response) {
        // other /api routes are not found, don't send to the spa
        return $response->withStatus(404);
    });
});

// everything that's not the spa backend gets the spa template
$app->get('[/{path:.*}]', function (Request $request, Response $response, Engine $view) {
    $response->getBody()->write($view->render('index'));

    return $response;
});
```

Slim matches these routes in the order they're defined. The /api group houses my SPA backend, and a wildcard route loads the SPA (which does its own routing) for everything else. I want a special HTML template for the Test Local.ly result pages, so I created a route for it before the wildcard route:

```php
// special handling for social media crawlers
$app->get('/tests/{id}[/]', function (Request $request, Response $response, string $id) {
    /* return the crawler-specific template */
});

// everything that's not the spa backend gets the spa template
$app->get('[/{path:.*}]', function (Request $request, Response $response, Engine $view) {
    $response->getBody()->write($view->render('index'));

    return $response;
});
```

The template I'm using is pretty minimal: a title, regular meta tags, Open Graph data, and some structured data for good measure:

```php
<?php
// $host, $uri, $target, $countries and $image are passed in by the route handler
$prettyCountries = implode(', ', $countries);
$description = "Screenshots of $target as seen by visitors in $prettyCountries, using geolocation";
$title = "$target on Test Locally";
$image = $image ?: "$host/favicons/android-chrome-192x192.png";
?>
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="utf-8">
    <meta http-equiv="X-UA-Compatible" content="IE=edge">
    <meta name="viewport" content="width=device-width, initial-scale=1">
    <meta name="description" content="<?= $this->e($description) ?>">
    <title><?= $this->e($title) ?></title>

    <meta property="og:site_name" content="Test Locally" />
    <meta property="og:title" content="<?= $this->e($title) ?>" />
    <meta property="og:type" content="website" />
    <meta property="og:url" content="<?= $this->e($uri) ?>" />
    <meta property="og:image" content="<?= $this->e($image) ?>" />
    <meta property="og:description" content="<?= $this->e($description) ?>" />

    <!-- Organization structured data -->
    <script type="application/ld+json">
    {
        "@context": "http://schema.org",
        "@type": "Organization",
        "name": "Test Locally",
        "description": <?= json_encode($description, JSON_UNESCAPED_SLASHES | JSON_HEX_TAG) ?>,
        "url": <?= json_encode($host, JSON_UNESCAPED_SLASHES | JSON_HEX_TAG) ?>,
        "image": <?= json_encode($image, JSON_UNESCAPED_SLASHES | JSON_HEX_TAG) ?>,
        "sameAs": [
            "https://twitter.com/testlocally",
            "https://ca.linkedin.com/company/wonderproxy"
        ]
    }
    </script>
</head>
<body>
    <h1><?= $this->e($title) ?></h1>
    <p><?= $this->e($description) ?></p>
</body>
</html>
```

You'll notice that there's a bunch of test result data in there. That needs to come from the Slim route handler through the stellar Plates templating library (magically injected into the handler with PHP-DI):

```php
// special handling for social media crawlers
$app->get('/tests/{id}[/]', function (Request $request, Response $response, Engine $view, string $id) {
    /* ...grab the test data... */

    $response->getBody()->write($view->render('social-metadata/test', [
        'host' => $request->getUri()->getScheme() . '://' . $request->getUri()->getHost(),
        'uri' => (string) $request->getUri(),
        'target' => $target, // the target URL
        'countries' => $countries, // the countries tested from
        'image' => $thumbnail, // one of the thumbnails from the test
    ]));

    return $response;
});
```

So far, so crispy. The test results page has been replaced by our minimal crawler template, for every visitor, crawler or not.
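Why does everyone get the crawler template at this point? A URL like /tests/abc123 matches both the crawler route and the catch-all, and the one registered first wins. (FastRoute, the router inside Slim 4, checks plain static paths before patterns with placeholders, but both of these routes have placeholders, so definition order is what decides.) ToyRouter below is a hypothetical, deliberately tiny router, not FastRoute's implementation; it models only that first-match-in-registration-order rule, with regexes standing in for Slim's route patterns.

```php
<?php
declare(strict_types=1);

// Toy first-match router. NOT how Slim or FastRoute are implemented; it only
// models "try the patterns in registration order, stop at the first match".
final class ToyRouter
{
    /** @var array<int, array{0: string, 1: string}> regex and route name pairs */
    private array $routes = [];

    public function get(string $regex, string $name): self
    {
        $this->routes[] = [$regex, $name];

        return $this;
    }

    public function match(string $path): ?string
    {
        foreach ($this->routes as [$regex, $name]) {
            if (preg_match($regex, $path) === 1) {
                return $name; // first match wins; later routes are never tried
            }
        }

        return null;
    }
}

// Crawler route first, then the catch-all, mirroring the Slim setup above.
$router = (new ToyRouter())
    ->get('#^/tests/[^/]+/?$#', 'crawler-template')
    ->get('#^/.*$#', 'spa');

echo $router->match('/tests/abc123'), "\n"; // crawler-template
echo $router->match('/privacy'), "\n";      // spa
```

Swap the two get() calls and the catch-all swallows /tests/abc123 as well, which is why the crawler route has to be defined before the wildcard.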

Redirecting the rest of us

You and I want more than that, though, so we need to make sure only crawlers get the special template.

First, I had to decide which social media networks we cared about. Where are Test Local.ly test results most likely to be shared, based on what we know about the audience for random tech tools?

- Slack

- Twitter

- Facebook

- LinkedIn

- Mastodon (ok this one is just because Mastodon is cool don't @ me)

The crawlers for all those social networks broadcast their identity in their User-Agent string, which is a standard HTTP request header that most browsers and web clients use. Slim has a nice interface for grabbing HTTP request headers, so that's what I'll use to check for the crawlers.

```php
$crawlers = [
    'https://api.slack.com/robots',
    'Twitterbot',
    'facebookexternalhit',
    'facebookcatalog',
    'LinkedInBot',
    'Mastodon',
];

$agent = $request->getHeaderLine('User-Agent');

foreach ($crawlers as $crawler) {
    if (strpos($agent, $crawler) !== false) {
        /* it's a crawler, send the special template */
    }
}
```

I could dump that check right in my special route handler:

```php
// special handling for social media crawlers
// (closures don't see outer variables, so $crawlers comes in via use)
$app->get('/tests/{id}[/]', function (Request $request, Response $response, Engine $view, string $id) use ($crawlers) {
    $agent = $request->getHeaderLine('User-Agent');

    foreach ($crawlers as $crawler) {
        if (strpos($agent, $crawler) !== false) {
            /* ...grab the test data... */
            /* return the special template */
        }
    }

    // not a crawler, so render the spa
    $response->getBody()->write($view->render('index'));

    return $response;
});
```

but if I decide I want any other pages to be cute on social media, I'd have to keep doing the same thing in every route. You may have picked up on this already, but I'm allergic to duplication. It raises my blood pressure. It's a problem, but not the problem I'm solving today, so I started digging into Slim's middleware functionality.
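Before reaching for middleware, the first step toward reuse is giving the check a name. Here's a sketch that lifts the same crawler list and strpos() test into a standalone function; isSocialCrawler() and SOCIAL_CRAWLERS are hypothetical names, not from the Test Local.ly code. One side benefit: a top-level constant is visible inside closures without a use clause, whereas a $crawlers variable defined outside a closure has to be passed in explicitly.

```php
<?php
declare(strict_types=1);

// Hypothetical constant holding the same User-Agent substrings as above.
const SOCIAL_CRAWLERS = [
    'https://api.slack.com/robots',
    'Twitterbot',
    'facebookexternalhit',
    'facebookcatalog',
    'LinkedInBot',
    'Mastodon',
];

// Hypothetical helper: true if the User-Agent contains any crawler marker.
function isSocialCrawler(string $userAgent, array $crawlers = SOCIAL_CRAWLERS): bool
{
    foreach ($crawlers as $crawler) {
        // Case-sensitive substring match, like the strpos() check above.
        if (strpos($userAgent, $crawler) !== false) {
            return true;
        }
    }

    return false;
}

var_dump(isSocialCrawler('Twitterbot/1.0'));                   // bool(true)
var_dump(isSocialCrawler('Mozilla/5.0 (X11; Linux x86_64)'));  // bool(false)
```

Because strpos() is case-sensitive, "twitterbot" in lowercase wouldn't match; stripos() would loosen that, at the cost of more accidental matches.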

The middleware of it all

In classic microframework style, Slim lets me attach a middleware handler to almost any part of its routing system. Middleware runs before and after the actual route handlers, so you can modify the request or do some validation before, and modify the response or do some logging after. Slim processes its middleware onion-style, last in/first out (LIFO), so the last middleware handler that gets attached is the first one that runs. I already have some middleware attached to give me error handling and some rate limiting, so I'll attach my check-for-crawlers middleware directly to the relevant route:

```php
use Psr\Http\Server\RequestHandlerInterface;

// special handling for social media crawlers
$app->get('/tests/{id}[/]', function (Request $request, Response $response, string $id) {
    /* return the crawler-specific template */
})->add(function (Request $request, RequestHandlerInterface $handler) {
    /* check for a crawler and either continue processing the crawler route or render the spa */
});
```

I'll drop my check for crawler user agents in the middleware before the route runs:

```php
// special handling for social media crawlers
$app->get('/tests/{id}[/]', function (Request $request, Response $response, string $id) {
    /* return the crawler-specific template */
})->add(function (Request $request, RequestHandlerInterface $handler) use ($crawlers) {
    // run this route handler if it's a crawler
    $agent = $request->getHeaderLine('User-Agent');

    foreach ($crawlers as $crawler) {
        if (strpos($agent, $crawler) !== false) {
            return $handler->handle($request);
        }
    }

    /* render the spa */
});
```

and here's the bit that broke my brain a little: Instead of creating a new response and delivering the SPA template in the middleware, I can reuse the route that already delivers the SPA template! I'll give the SPA route a name, and then use that name to find and run it directly from the middleware:

```php
// special handling for social media crawlers
$app->get('/tests/{id}[/]', function (Request $request, Response $response, string $id) {
    /* return the crawler-specific template */
})->add(function (Request $request, RequestHandlerInterface $handler) use ($app, $crawlers) {
    // run this route handler if it's a crawler
    $agent = $request->getHeaderLine('User-Agent');

    foreach ($crawlers as $crawler) {
        if (strpos($agent, $crawler) !== false) {
            return $handler->handle($request);
        }
    }

    // THIS WORKS?!
    // (☝ literally in prod right now)
    return $app->getRouteCollector()->getNamedRoute('spa')->handle($request);
});

// everything that's not the spa backend gets the spa template
$app->get('[/{path:.*}]', function (Request $request, Response $response, Engine $view) {
    $response->getBody()->write($view->render('index'));

    return $response;
})->setName('spa');
```

Technically, closing over the $app variable that way is probably not advisable from a general encapsulation perspective, but it works, and I figured it was marginally better than making a bunch of static calls to routing internals that were never intended to be used this way. We're a scrappy startup! Best practices are for the birds! And enterprises!
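If the onion-style LIFO ordering described earlier in this section is hard to picture, here's a toy model of it. ToyStack is hypothetical and much simpler than Slim's real middleware dispatcher (requests and responses are plain strings), but it follows the same rule: each add() wraps everything added before it, so the last middleware added is the first one to run.

```php
<?php
declare(strict_types=1);

// Toy middleware stack (not Slim's implementation). Requests and responses
// are plain strings to keep the focus on ordering.
final class ToyStack
{
    /** @var callable(string): string the current outermost layer */
    private $next;

    public function __construct(callable $routeHandler)
    {
        $this->next = $routeHandler;
    }

    public function add(callable $middleware): self
    {
        $inner = $this->next;
        // The new middleware becomes the outermost layer; it receives the
        // request plus a callable that runs everything added before it.
        $this->next = static function (string $request) use ($middleware, $inner): string {
            return $middleware($request, $inner);
        };

        return $this;
    }

    public function handle(string $request): string
    {
        return ($this->next)($request);
    }
}

$log = [];
$stack = (new ToyStack(function (string $request) use (&$log): string {
    $log[] = 'route';
    return "response to $request";
}))
    ->add(function (string $request, callable $next) use (&$log): string {
        $log[] = 'first added';
        return $next($request);
    })
    ->add(function (string $request, callable $next) use (&$log): string {
        $log[] = 'last added';
        return $next($request);
    });

$response = $stack->handle('GET /tests/abc123');
print_r($log); // order: last added, first added, route
```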

Robots and humans in harmony

With my special crawler route and template, and the crawler middleware giving it a bear hug, my test result pages now look like this on social media:

https://t.co/PnNO8ZaN8p goes well beyond languages and GDPR banners when it comes to localizing their home page. The product listings are distinct, sure, but so is the pitch! https://t.co/mVxyEPJIY7 #localization #geoip

— testlocally (@testlocally) September 3, 2021

and like this when you visit them in person:

We may need to rethink the solution if we decide we want rich previews on DuckDuckGo or Bing, but for now, Test Local.ly is sorted. We'd love to hear about your solutions (or hilarious failures), so hit us up on Twitter with your stories!

I hope you all appreciate the mountain of self-restraint I demonstrated by not filling my headers with SPA puns. You're welcome.