Test websites with skill: beyond button-clicking and script-following

Why testing gets no respect

It's not uncommon for me to get a call to put together a testing team. Until recently, geography mattered when it came to staff, and the commuting community around an office isn't that large. Too often the company would want to pay peanuts, then be disappointed when they got monkeys. In the rare event I found a diamond in the rough, the company might be impressed - then try to hire that person into a developer position! In other words: When we hire and expect low-quality staff, the result is low-quality testing.

The other possibility is something I call the west coast school, where the organization does not have any testers at all, but instead generalists with a broader skillset that can take an application from concept to cash. That's fine by me; I am less interested in testing-as-job and more interested in testing as an activity. Most companies get there because of the same self-fulfilling prophecy; low-paid testers that either "play" with the software, perhaps boring pre-written scripts that are slow and don't add much value, or, sometimes, expensive and brittle user interface tests that don't find many bugs.

All of these are really problems with testing as much as testing done poorly. I propose an alternative to doing it poorly. Here goes.

Good testing done well looks a lot like good chess. It may not be defined upfront, but it is definitely a skill. It is tempting to think of good testers as lone geniuses who think differently, but as it turns out testing, like chess, can be observed, reflected on, studied, and taught. Today I will break down elements of a test strategy and provide resources to help you develop one that is unique to the risks in your organization.

Regression testing vs. feature-testing

A lot of the thinking about testing, what little we have, is influenced by the waterfall development of a previous century when we used to physically ship disks in the mail. Those were the days before Extreme Programming, Test Driven Development, and Continuous Integration existed. We had to re-test everything at the end, to make sure a change in one thing did not break something else, making sure the software did not regress. That was a good economic decision at the time. A bad disk set would cost more than tens of thousands of dollars to resend; it could destroy the company's reputation in the marketplace.

At the time, back in the bad old days, we would write a specification for the whole project. Later we would have integration, when nothing would work, and we would test things into being good enough, burning down the specification. The next release would be another big project, and at the end we would regression-test everything.

Today things are unlikely to work that way. Most companies I work with write stories; it is unlikely there is a "specification" source of truth for the software. With each story, we test just the new functionality, called feature-testing. If the heavy bugs are regression bugs, that is a software engineering problem. The code should be composed of small independent units that can be deployed separately and only break a small piece at a time. Still, until you figure that out, it would be best to have a strategy that covers both.

So let's start with getting really good at feature testing.

Testing Features: Failure Modes and Quick Attacks

For our purposes a feature is a small, independent piece of functionality and the things that surround it. A pop-up screen that impacts the text you are editing in Google Docs, for example, might be a feature. Testing that would likely include the feature, the screen that is the result, but also waiting five minutes and reloading the screen to make sure that when the page re-loads the change appears.

How did I know that?

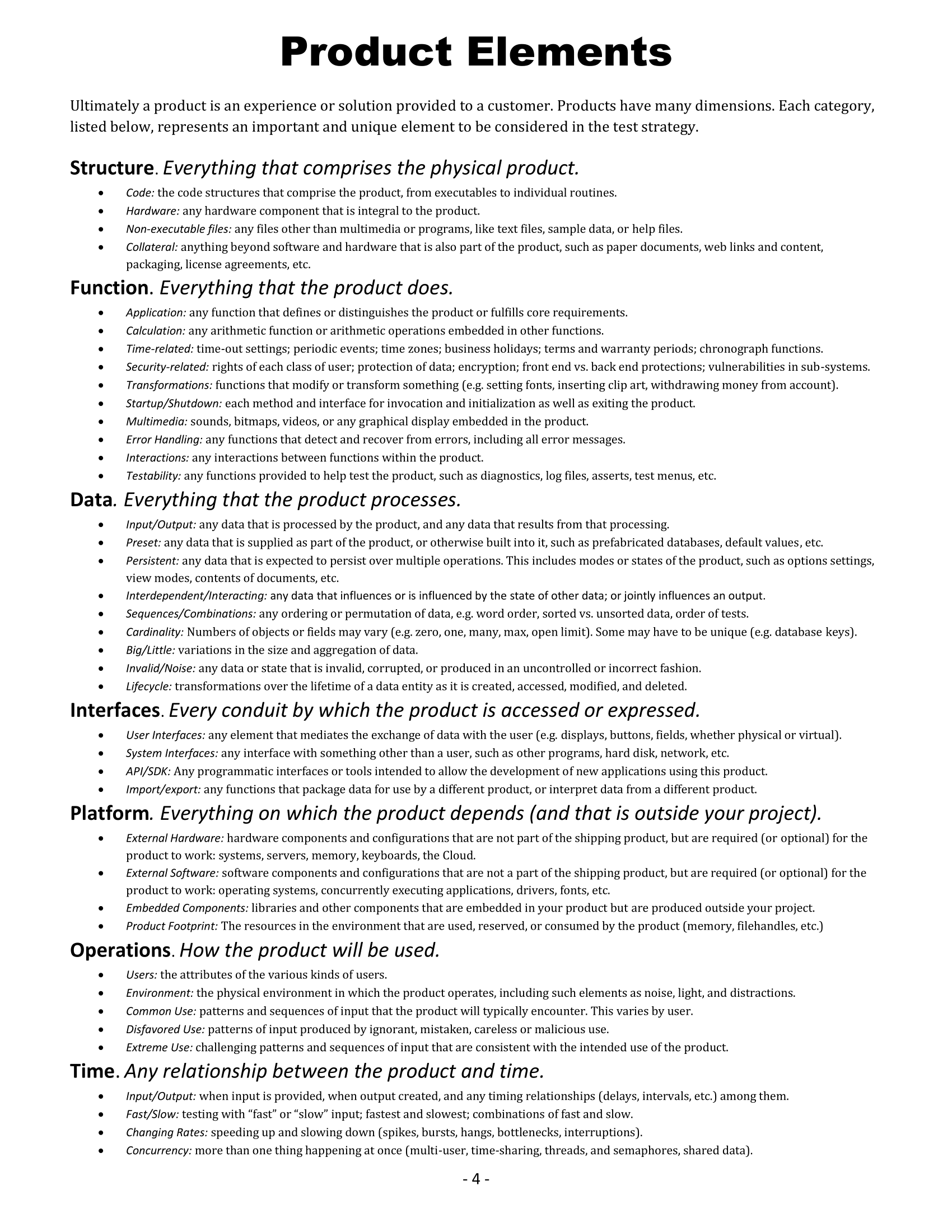

James Bach and Michael Bolton's Heuristic Test Strategy Model is one place to start to gain the skill to see that kind of problem. It contains several guideword heuristics, or rules-of-thumb, to help the reader come up with what might go wrong and what to check. Their most famous mnemonic is likely "San Francisco Depot", a way to remember "Structure, Function, Data, Interfaces, Platform, Operations, Time."

Personally, I like to focus on failure modes - the common things that go wrong with the platform - and quick attacks. Quick attacks are common ways to overwhelm the software to see if it breaks under unexpected conditions. Anyone who studies quick attacks for web or mobile can find ways to check the software for failure without even understanding the business rules. One good version of quick attacks is platform failures. For example, while we don't test for dropping network connections (and slow wireless) so much for laptops, that is a common issue for mobile software, along with heat or multitasking issues.

The last piece of the puzzle is those pesky business rules. There is an entire body of knowledge called domain analysis that takes the business rules into account and selects a few of the most powerful tests to execute the most code. To do this we might create a tree or table with different classes of inputs, then test combinations of classes, nodes of the tree, and boundaries around the classes that we have time for.

That's the strategy. Let's talk about how to execute it.

Exploration, Recipes and Scripts

Earlier I implied scripted testing is a boring way to not find important bugs slowly. That's probably because it's true. To put that differently, a skilled person writing things down on a piece of paper two months ago is going to be beaten by a skilled tester every time. As it turns out, the best test ideas, like the best chess moves, often occur in the moment of testing the software, in response to something unexpected. Years ago we called this "exploratory testing", to contrast with scripted testing. Today we are more likely to call it "actually testing" - see James Bach's comment on his dropping the term, back in 2015.

In our work we often find that there are some things worth writing down. How to set up complex accounts and transactions, SQL commands to populate databases, there is often a need for explanations. Sometimes I call these "recipes", and I might describe them on a wiki, with a mindmap that links to those wiki pages. The example above is a real list of features, colored as to quality (red, yellow, green) used to make release decisions for an application that still needed regression testing.

We're winding down, yet I haven't talked about automation yet.

Tooling and Automation

Most of the teams I work with are doing poor testing, getting poor results, so they want to jump to automation. I suppose you could argue that automation would just get them poor results faster, but there is another, hidden risk. At the end of every one of those boring test case documents is a hidden expectation "and nothing else odd happened." As it turns out, computers are remarkably bad at checking for that.

Don't get me wrong, I am not opposed to tooling and automation. I'd prefer to start with things that are done exactly the same every time -- setup, build, load test data and, in some cases, deploy. Once that is done, if the software still needs regression testing, a good smoke test suite can find the bugs minutes after they are committed to version control, not the hours or days a human tester might take. The CI system can figure out who made the change and notify them, so the programmer can get right back in and fix things. Careful selection of what to add to the test suite, perhaps as a testing step before the story is considered tested, can continue to prevent regressions from escaping the programmers.

There are other things that a computer can do almost exactly the same every time, but perhaps a bit different, such as running all the same Graphical User Interface (GUI) tests with a small change. Perhaps you'd like to run them against every possible language for internationalization, for example. Those sorts of things can be incredibly powerful uses of automation.

A common problem with automation is that it requires programming, which means we need programmers, but if they are good they'll be poached to work on the main software, leading us to our peanuts and monkeys problem. Although I was skeptical at first, I find some of the new "adaptive" codeless frameworks are becoming good enough to use for regression testing.

Putting it all together

Today I focused on the human experience of the software, what you might call the iceberg above the surface. Below the surface we have APIs, code, unit tests and so on. Developers who write effective unit and integration tests can continue to ratchet up the quality before things ever get to test. A good test strategy will balance standard checking with human exploration, moving the standard checking work to the computer. My experience is that balance often works best with the computer checking for regression and the human doing the feature-testing.

One area that sits in the middle, between regression and feature-testing, between repeatable and not, is that long walk of the user journey, from signup to checkout. These are repeatable and boring, but if you have the computer do it, those tests tend to be brittle and leave the data in a particular state (and a different state if something goes wrong), making them difficult to debug. Getting the right balance of automation and human testing can be tricky here. My preference is to slice the journey into small elements, called Dom-to-Database tests, and have the computer run those, while leaving the complex user journey to a human.

Testing is only a part of quality, but it can be a big part of solving today's problems, and point to tomorrow's solutions.

How are you doing?