TestBash SF 2018 Recap: Day 2

This is a recap of the second day of TestBash San Francisco, held November 8-9 at the Cowell Theater in the Marina District. You can also read about the first day.

Day 2 Presentations

Kim Knup on “Stories from Testing Voice First Devices”

IoT devices like Amazon’s Echo have no visible interface, which can complicate your testing strategy if you are used to having a GUI. How do you test something that uses voice as both input and output, has no buttons, and tries to imitate a natural conversation? Kim outlined her strategy for testing an Echo app, also called a “skill”. First you need to understand the “interaction model”, which consists of a wake word, an invocation name, and intents. The Echo currently has four wake words (such as “Alexa”). The wake word is followed by the invocation name (the skill or app name) and possibly by an intent (a piece of your skill’s core functionality). Once you understand this interaction model, you can test a skill with the following methods:

- Text and voice dev tools, which let you test inputs and outputs as text and are good for debugging and quickly verifying bugs

- Role playing, by talking to your device early and often to experiment with how it handles the various commands

- Creating decision trees that visualize the potential paths you can take through the logic while giving the device voice commands, including using cheat intents to skip ahead or back

- Real Life with real user groups of diverse people (turns out accents are a problem).
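To make the interaction model concrete, here is a minimal Python sketch of how an utterance breaks down into a wake word, an invocation name, and an intent. It is not how Alexa or Kim's skill actually parses speech. The skill name "trivia time", the intent names, and the `parse_utterance` helper are all hypothetical, and only one wake word is listed for brevity.

```python
from dataclasses import dataclass
from typing import Optional

# All values below are hypothetical, chosen only to illustrate the model.
WAKE_WORDS = {"alexa"}            # the source mentions four; one is enough here
INVOCATION_NAME = "trivia time"   # made-up skill name
INTENTS = {
    "start a new game": "StartGameIntent",
    "repeat the question": "RepeatIntent",
    "skip to the last question": "CheatSkipIntent",  # a "cheat intent" for testers
}


@dataclass
class ParsedUtterance:
    wake_word: str
    invocation_name: str
    intent: Optional[str]  # None when the user only opened the skill


def parse_utterance(text: str) -> Optional[ParsedUtterance]:
    """Split text into wake word + invocation name + intent, or return None."""
    words = text.lower().replace(",", "").split()
    if not words or words[0] not in WAKE_WORDS:
        return None  # the device never woke up
    rest = " ".join(words[1:])
    # Drop a leading launch verb such as "ask" or "open" if present.
    for verb in ("ask ", "open ", "tell "):
        if rest.startswith(verb):
            rest = rest[len(verb):]
            break
    if not rest.startswith(INVOCATION_NAME):
        return None  # some other skill was requested
    remainder = rest[len(INVOCATION_NAME):].strip()
    if remainder.startswith("to "):
        remainder = remainder[3:]
    if not remainder:
        return ParsedUtterance(words[0], INVOCATION_NAME, None)
    # Unknown phrases fall through to a fallback intent.
    intent = INTENTS.get(remainder, "FallbackIntent")
    return ParsedUtterance(words[0], INVOCATION_NAME, intent)
```

Writing the phrases down this way also gives you the nodes of a decision tree: each intent is a branch you can role play with the device, and the cheat intent is a shortcut to a deep node.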

Michele Campbell on “How to defuse a bomb.. I mean a bug”

Michele says testing can be stressful sometimes, especially when someone comes back and asks why the team missed a bug. She shared how her company tries to prevent this idea of “not catching bugs”:

- Try to prevent the bug.

- If you can’t, maybe you can defuse it.

- If you can’t defuse it, maybe you can try to contain it.

- If that doesn’t work you have to clean it up.

Preventing bugs from getting into production comes from training people on the product (her company spends more than a month on this), having working automated tests, giving teams the tools they need, and pair testing, where any two team members (Dev, Prod, QA, UX, PM) sit together at one keyboard and test the product with the goal of helping the developers explore their thinking. If the code has been merged but not yet shipped and somebody finds a bug, you can defuse it (and the situation) by taking a step back and evaluating what happened using charters and session-based testing. With this additional information you can see just how big or impactful the bug might be and determine when to fix it. If the bug gets shipped to production, it’s time to contain it through a bug triage; that way, if someone complains, the fix has already been prioritized (or not). Finally, if a customer finds it, it’s time for a bug scrub: the team goes through that specific area of the product looking for more bugs, and then pushes them up the bug backlog to be fixed.

Jason Arbon on “AI Means Centralized Testing is Inevitable”

Jason is co-founder and CEO of test.ai. He co-wrote the book “How Google Tests Software” after working for Google. He also worked for Microsoft, focusing on testing at scale by using neural networks to find bugs in Bing. As a former tester, he understands the skepticism around AI and its ability to test, but he made the case that AI makes testing reusable and possible at scale. It can look for generic features and use its speed to run through tens of thousands of tests quickly, while also getting feedback from humans on how well it is doing. That’s the big thing about AI and machine learning in its current state: how do you train the machine without hard-coding it? You do something called reinforcement learning: every time the computer runs a test (or scenario), you tell it how it did. Then you let the machine iterate thousands of times until it has a well-built model of how to test something. How does the move towards AI mean centralizing testing? Since many parts of your application aren’t special (you’ve got a sign-in screen, search, etc.), an AI bot can be applied to your application from a centralized spot and find bugs. When a human is included in the loop to fill in the details the bots miss, all of a sudden you have tests that don’t need to be written twice, because a centralized set of tests will work.

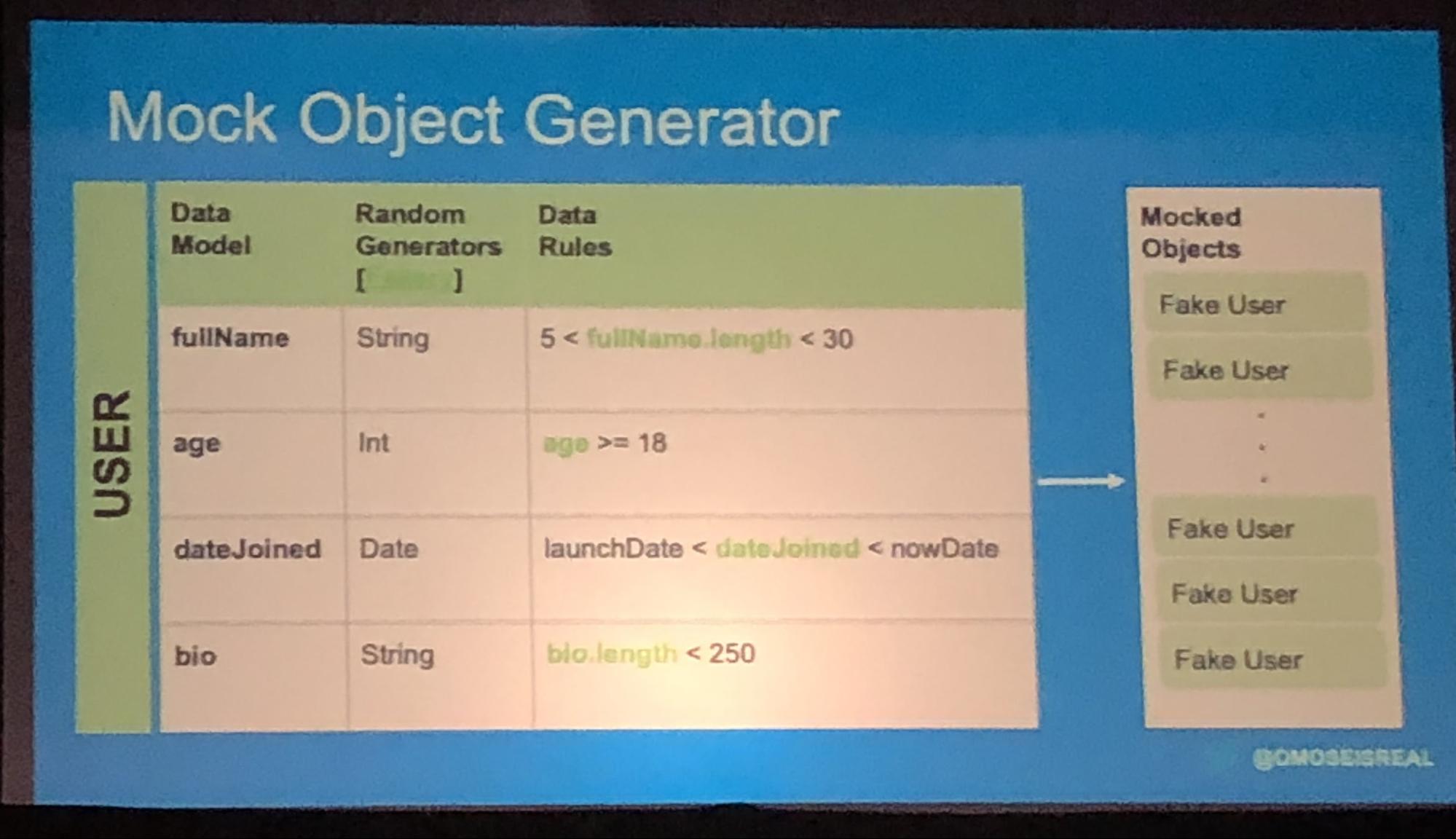

Omose Ogala on “Techniques for Generating and Managing Test Data”

Omose writes test automation for Twitter’s mobile application. One challenge is coming up with test data that looks and acts the same way real data does. This becomes more challenging the “smarter” your application is, because the data is constantly changing. So how do you generate test data that looks and acts like the real data does? First you can copy real data. Imagine using Postman to make an API request, copying the JSON response, and then using the response data in your application. If you don’t want to copy your test data (or you want an easier way to generate it) the second thing you can try is to fake the data. To fake your data, you need to understand your data’s structure, the restrictions put on it (often by the back-end), and what makes your data valid. Then you can fake it either by using a mocked JSON file (such as you might do when you copy a response) or using a mock object generator.
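As a rough illustration of faking data, the Python sketch below captures a data structure, its restrictions, and a validity check, then generates random records that satisfy them. It is not Omose's code and does not reflect Twitter's real data model. The `FakePost` fields, the 280-character and 15-character limits, and the helper names are all assumptions made for the example.

```python
import random
import string
from dataclasses import dataclass

# Hypothetical restrictions, standing in for whatever the back-end enforces.
MAX_TEXT_LENGTH = 280
MAX_USERNAME_LENGTH = 15


@dataclass
class FakePost:
    post_id: int
    username: str
    text: str
    like_count: int


def is_valid(post: FakePost) -> bool:
    """Encode what makes a record valid, so generated data can be checked."""
    return (
        post.post_id > 0
        and 1 <= len(post.username) <= MAX_USERNAME_LENGTH
        and post.username.isalnum()
        and 0 < len(post.text) <= MAX_TEXT_LENGTH
        and post.like_count >= 0
    )


def make_fake_post(rng: random.Random) -> FakePost:
    """Build one random post that respects the restrictions above."""
    username = "".join(
        rng.choices(string.ascii_lowercase + string.digits,
                    k=rng.randint(1, MAX_USERNAME_LENGTH))
    )
    words = [
        "".join(rng.choices(string.ascii_lowercase, k=rng.randint(2, 8)))
        for _ in range(rng.randint(1, 20))
    ]
    text = " ".join(words)[:MAX_TEXT_LENGTH]
    return FakePost(
        post_id=rng.randint(1, 10**9),
        username=username,
        text=text,
        like_count=rng.randint(0, 5000),
    )


# Passing a seeded generator keeps test runs reproducible.
posts = [make_fake_post(random.Random(seed)) for seed in range(5)]
```

Seeding the generator is a deliberate choice: a failing test can be replayed with the same data, which is harder to do when the data is copied from a live response that keeps changing.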

Amber Race on “How I learned to Stop Worrying and Test in Production”

Amber walked us through what it was like as her company, Big Fish Games, got up and running with monitoring so they could be sure they were testing the right things when they did test in production. There are some fairly common tools available today to help companies gather, store, analyze and display their data. You may have heard of the ELK Stack, which has become a popular option for monitoring. Big Fish used a different stack, Graphite and Grafana, for its solution. After deciding on the stack, they needed to figure out what to collect, so they started tracking specific APIs (request counts, failures, response times), Memcache usage, thread counts per machine, exceptions, third-party responses, system stats, DB stats and more. Once they started monitoring these things, they quickly found a number of issues, go figure. With monitoring in place (looking for known unknowns), they began to look at the benefits of observability (looking for unknown unknowns). Today Amber is known to do lots of testing in production, including chaos testing, configuration testing and even performance testing.
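For a sense of what collecting one of these metrics can look like, here is a small Python sketch that formats a data point for Graphite's plaintext protocol, where each line is a metric path, a value, and a Unix timestamp. This is not Big Fish's setup. The metric name `api.login.response_ms`, the helper names, and the host are assumptions, and port 2003 is the usual Carbon plaintext default rather than something stated in the talk.

```python
import socket
import time
from typing import Optional, Union


def format_metric(path: str, value: Union[int, float],
                  timestamp: Optional[int] = None) -> str:
    """Return one Graphite plaintext line: '<path> <value> <timestamp>\\n'."""
    if timestamp is None:
        timestamp = int(time.time())
    return f"{path} {value} {timestamp}\n"


def send_metric(line: str, host: str = "localhost", port: int = 2003) -> None:
    """Send a formatted line to a Carbon listener (host and port are assumed)."""
    with socket.create_connection((host, port), timeout=2) as sock:
        sock.sendall(line.encode("ascii"))


# Hypothetical metric name for an API response time in milliseconds.
line = format_metric("api.login.response_ms", 42, timestamp=1541721600)
```

Keeping the formatting separate from the network call means the line can be checked in a unit test without a Graphite server running.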

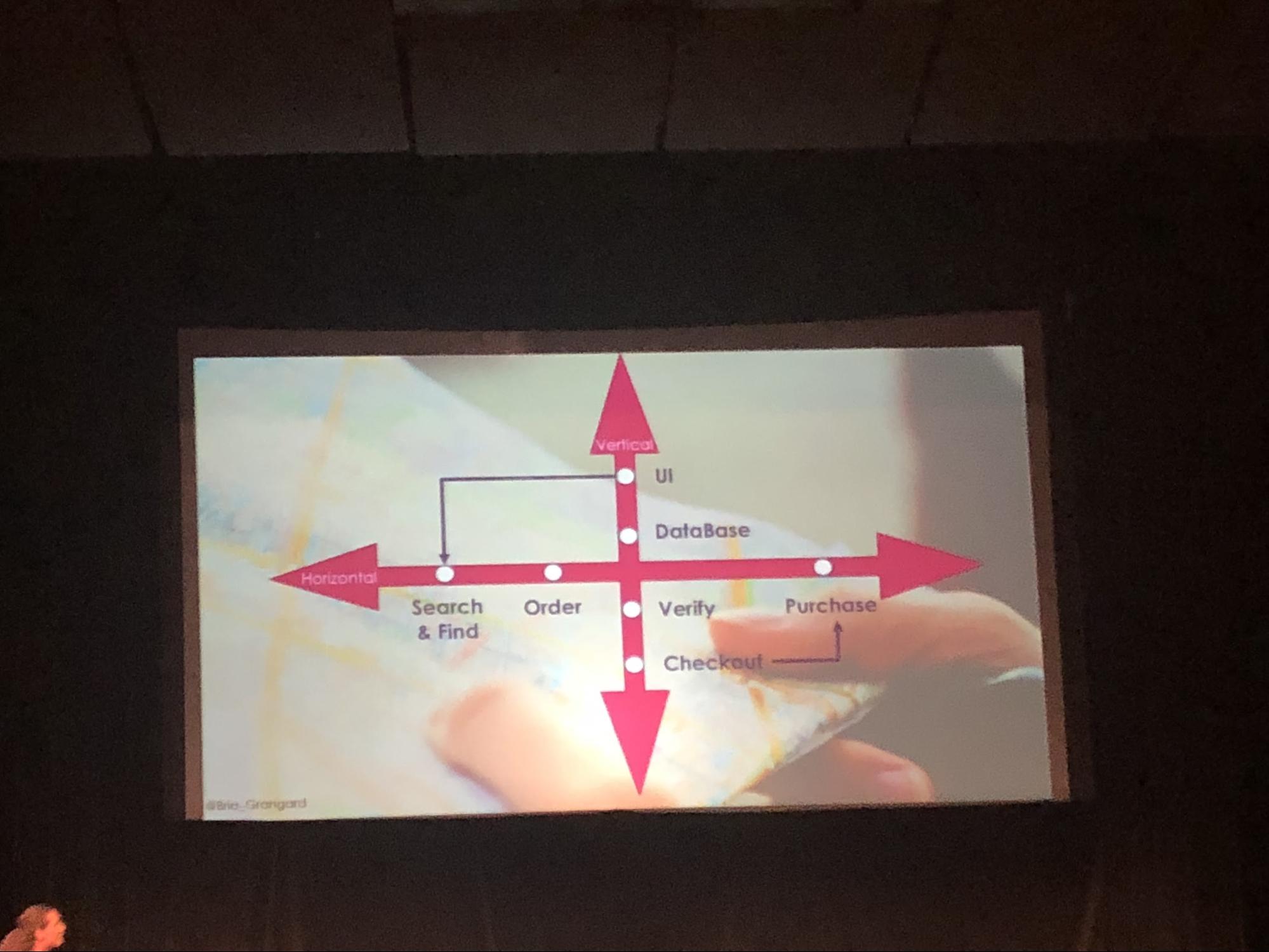

Bria Grangard on “Testing the Front-End, Back-End and Everything in Between”

Bria gave the audience advice on how to approach testing an application holistically, and introduced a new model for looking at end-to-end (e2e) tests. In order to test the front-end and back-end of an application, Bria asked the audience to do the following:

- Focus on the user experience

- Consider functional and performance aspects

- Do horizontal and vertical e2e testing

- Take a top down approach

- Create metrics for success.

Focusing on the user experience means being able to simulate real user scenarios as a way to expand test coverage and detect bugs. Horizontal e2e testing is similar to focusing on the user, in the sense that you want to follow a workflow or a user journey from start to finish. Vertical e2e testing is about testing each layer or sub-system of an application’s architecture independently for bugs. By approaching your e2e testing both vertically and horizontally, you get the best of both worlds. Finally, make sure you’ve got your metrics for success, whether that’s information on environments, test progress, or something else.

Melissa Eaden and Avalon McRae on “Getting Under the skin of a React Application - An Intro to Subcutaneous Testing”

Both Avalon and Melissa were working at Thoughtworks on a project for a scheduling application, and they noticed their testing framework wasn’t helping much. They were using a JavaScript-based Selenium UI framework, but it was big, unwieldy, and not well maintained. If you have only unit tests and full-stack tests, it’s hard to tell where a problem is, so they decided to take a different approach. They found that writing a test that integrates the front-end code without hitting the API gave them more actionable results. So as a team, they worked to define subcutaneous testing as “...a single workflow which can be identified and tested, of the application code...” Subcutaneous testing treats a client-side workflow as a testable unit. The presentation wove nicely in and out of code examples from what they worked on. What they found was that subcutaneous testing shifted their coverage to focus on application health, integration stability, browser compatibility, and accessibility in a way that e2e and unit testing wouldn’t have been able to accomplish.

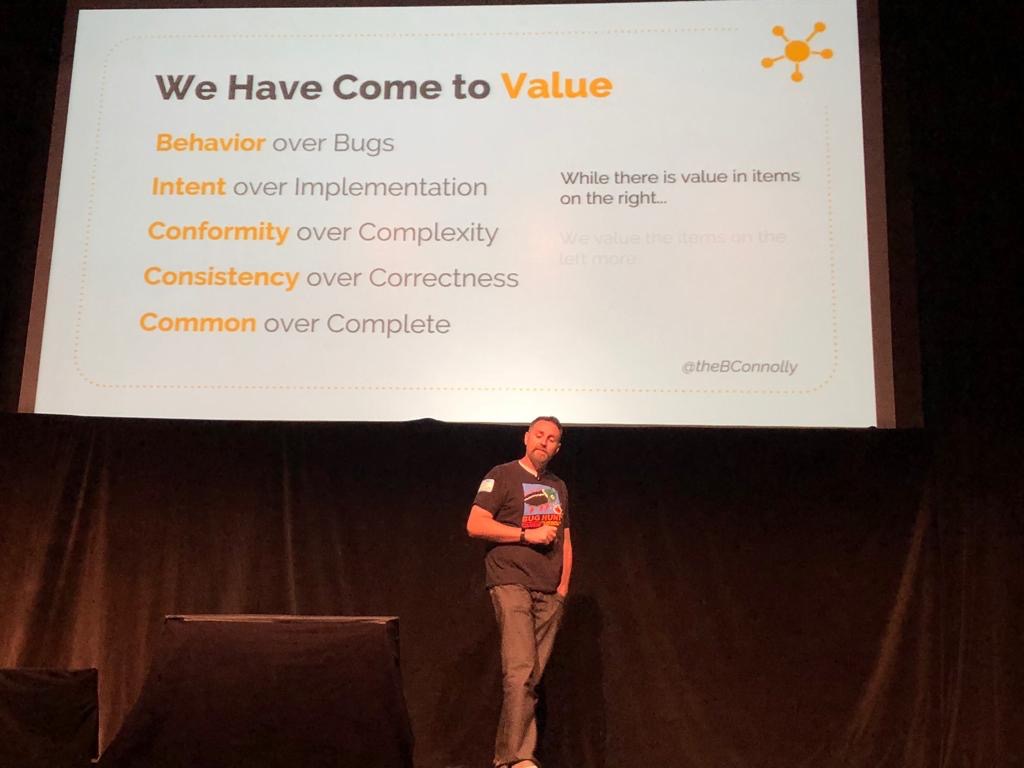

Brendan Connolly on the “Manual Regression Testing Manifesto”

Agile principles provided a foundation for understanding the movement towards faster delivery, and Brendan made the case that it’s about time we had something similar for regression testing. His manifesto is: Behavior over Bugs, Intent over Implementation, Conformity over Complexity, Consistency over Correctness, and Common over Complete. The goal of this model is to spark meaningful conversation and promote a better understanding of why we regression test in the first place.

99 Second Talks

TestBash is famous for its 99 second talks, which are exactly what they sound like. You can either sign up ahead of time or simply line up when they happen. Anyone can present in front of the audience for a grand total of 99 seconds. At least 20 people, including me, gave talks on a wide variety of subjects. I got through most of the Modern Testing Principles before running out of time (watch the video). It was a great way to end the conference!

A Very Good Conference

All in all, TestBash SF was a lot of fun and a very good conference. If you’ve read through either or both of these posts, you can see that the volume of talks is such that you can’t walk away without having been exposed to some interesting and hopefully new ideas. Other conferences might have longer workshops or a better focus on conferring and discussion, but you can walk away from them having been exposed to only a small number of talks and ideas, and having had to make tough decisions about what to skip. With TestBash you get to see everything that happens and no one misses out. If you are sad you missed TestBash SF 2018, don’t worry: you can view several free presentations on The Dojo, or subscribe and watch them all!